Recognizing Actors in Movie and TV Scenes


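While watching a TV series, I noticed an interesting feature: the names of the actors in each scene were shown on screen. Inspired by this, I used AI to build my own solution that reproduces the same feature.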

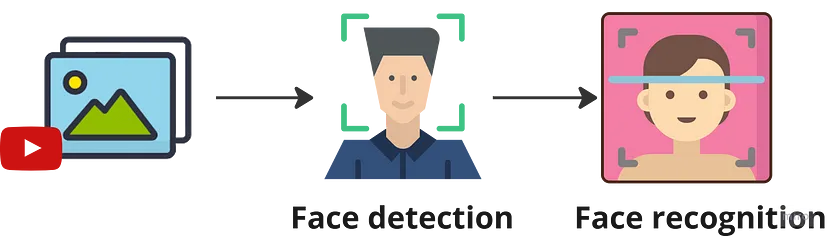

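The approach is simple: face detection locates and crops each actor's face, and face recognition then predicts the actor's name. I use the keras-facenet package for both steps: its `extract` method detects faces (with an MTCNN detector), and the FaceNet model turns each face into an embedding vector.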

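For the recognition part, I use few-shot learning: only a handful of face images per actor are embedded and used as the reference set. For more details, see my earlier article on unlocking the potential of image classification with few-shot learning.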
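The few-shot idea can be sketched in a few lines of NumPy: each person's handful of embeddings is averaged into one prototype vector, and a new face is given the name of the nearest prototype. The 2-D vectors and the names `alice` and `bob` are made up for illustration (real FaceNet embeddings are much higher-dimensional), and the helper names `build_prototypes` and `classify` are hypothetical.

```python
import numpy as np


def build_prototypes(support: dict) -> tuple:
    """Average each person's few-shot embeddings into one prototype vector."""
    names = sorted(support)
    prototypes = np.stack([np.mean(support[n], axis=0) for n in names])
    return prototypes, names


def classify(query: np.ndarray, prototypes: np.ndarray, names: list) -> str:
    """Return the name whose prototype is nearest in Euclidean distance."""
    distances = np.linalg.norm(prototypes - query, axis=1)
    return names[int(np.argmin(distances))]


# Toy 2-D "embeddings": three shots per person (hypothetical data)
support = {
    "alice": np.array([[1.0, 0.0], [0.9, 0.1], [1.1, -0.1]]),
    "bob": np.array([[0.0, 1.0], [0.1, 0.9], [-0.1, 1.1]]),
}
prototypes, names = build_prototypes(support)
print(classify(np.array([0.8, 0.2]), prototypes, names))  # prints "alice"
```

Averaging keeps the reference set to one vector per actor, so matching a face costs one distance computation per actor regardless of how many shots were collected.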

In this article, I use the Korean drama *Marry My Husband*, available on Prime Video, to walk through the process:

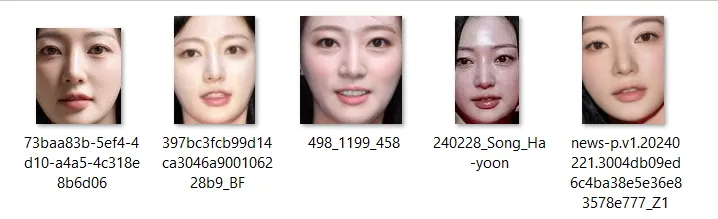

1. Prepare the support set

In this step, I prepared cropped face images of every actor in the drama, using only five images per actor.
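The embedding code in step 3 reads the support set from disk and assumes one sub-folder per actor, named after that actor and holding their face crops. A small helper like the one below can confirm the layout before computing embeddings. It is a hypothetical sketch: the function names and the accepted image extensions are assumptions, not part of the original workflow.

```python
import os

# Assumed image extensions; adjust to match your crops
IMAGE_EXTS = {".jpg", ".jpeg", ".png"}


def count_support_images(support_set_dir: str) -> dict:
    """Map each actor sub-folder to the number of image files it contains."""
    counts = {}
    for actor_name in sorted(os.listdir(support_set_dir)):
        actor_dir = os.path.join(support_set_dir, actor_name)
        if not os.path.isdir(actor_dir):
            continue  # ignore stray files next to the actor folders
        counts[actor_name] = sum(
            1
            for f in os.listdir(actor_dir)
            if os.path.splitext(f)[1].lower() in IMAGE_EXTS
        )
    return counts


def check_support_set(support_set_dir: str, shots: int = 5) -> list:
    """Return the actors whose folder does not hold exactly `shots` images."""
    return [
        name
        for name, n in count_support_images(support_set_dir).items()
        if n != shots
    ]
```

For example, `check_support_set("./support_sets")` returns an empty list when every actor folder holds exactly five crops.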

2. Download the video from YouTube

For this demo, I download the video clip with pytubefix, a fork of the Python library pytube:

```bash
pip install pytubefix
```

Code to download the video:

```python
import os

from pytubefix import YouTube
from pytubefix.cli import on_progress


def download_video(video_link: str, downloaded_video_path: str) -> None:
    """Download the highest-resolution stream of a YouTube video."""
    yt = YouTube(video_link, on_progress_callback=on_progress)
    ys = yt.streams.get_highest_resolution()
    # Create the output directory if it does not exist yet
    os.makedirs(downloaded_video_path, exist_ok=True)
    ys.download(downloaded_video_path)
```

3. Embed the support-set faces into vectors

I use the FaceNet model, through the keras-facenet library, to extract features from the actors' faces:

```bash
pip install keras-facenet
```

Model initialization:

```python
from keras_facenet import FaceNet

# Load the pretrained FaceNet model
embedder = FaceNet()
```

Preprocess the images and compute the embeddings:

```python
import os
from typing import List, Tuple

import cv2
import numpy as np

# Source: https://dev.to/abhinowww/how-to-build-a-face-recognition-system-using-facenet-in-python-27kh


def preprocess(image: np.ndarray) -> np.ndarray:
    """Resize a face crop to 160x160 and add a batch axis."""
    image = cv2.resize(image, (160, 160))
    image = np.expand_dims(image, axis=0)
    return image


def get_support_set_vector(support_set_dir: str) -> Tuple[np.ndarray, List[str]]:
    """Return one mean embedding per actor plus the matching actor names.

    Expects one sub-directory per actor, named after that actor.
    """
    actor_names = []
    support_set_vectors = []
    for actor_name in sorted(os.listdir(support_set_dir)):
        actor_dir = os.path.join(support_set_dir, actor_name)
        if not os.path.isdir(actor_dir):
            continue  # skip stray files
        actor_names.append(actor_name)
        actor_features = []
        for image_file in os.listdir(actor_dir):
            image = cv2.imread(os.path.join(actor_dir, image_file))
            if image is None:
                continue  # skip files OpenCV cannot decode
            # OpenCV loads images as BGR; convert to RGB before embedding
            image = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)
            image = preprocess(image)
            feature = embedder.embeddings(image)
            actor_features.append(feature[0])
        # Average the few-shot embeddings into a single vector per actor
        support_set_vectors.append(np.mean(actor_features, axis=0))
    return np.array(support_set_vectors), actor_names


support_set_vectors, actor_names = get_support_set_vector("./support_sets")
```

4. Euclidean distance

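To measure how similar a detected face is to each actor in the support set, I compute the Euclidean distance between the face's embedding and each actor's support-set vector; the smaller the distance, the more similar the faces.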
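In formula form (the notation is introduced here for illustration): let $\mathbf{e}_{k,i}$ be the embedding of the $i$-th support image of actor $k$, $N_k$ the number of such images (five here), and $\mathbf{q}$ the embedding of a detected face. Actor $k$'s support-set vector is the mean embedding $\boldsymbol{\mu}_k$, $d_k$ is the value `calculate_query_distance` returns for that actor, and the predicted actor $\hat{k}$ is the one with the smallest distance:

$$
\boldsymbol{\mu}_k = \frac{1}{N_k}\sum_{i=1}^{N_k}\mathbf{e}_{k,i},
\qquad
d_k = \lVert \mathbf{q} - \boldsymbol{\mu}_k \rVert_2 = \sqrt{\sum_{j=1}^{D}\left(q_j - \mu_{k,j}\right)^2},
\qquad
\hat{k} = \arg\min_k d_k
$$

where $D$ is the embedding dimension.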

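```python
def calculate_query_distance(
    query_vector: np.ndarray,
    support_set_vectors: np.ndarray,
) -> np.ndarray:
    """Euclidean distance from one query embedding to every actor's vector."""
    # Broadcasting subtracts the query from each row of support_set_vectors
    distances = np.linalg.norm(support_set_vectors - query_vector, axis=1)
    return distances
```

5. Detect and recognize actors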

Putting it all together, the code below detects the faces in each video frame and identifies which actor each face belongs to:

```python
import os

import cv2
import numpy as np

# downloaded_video_path is the output directory passed to download_video() in step 2
video_path = os.path.join(
    downloaded_video_path,
    os.listdir(downloaded_video_path)[0],
)

video_capture = cv2.VideoCapture(video_path)
detection_threshold = 0.75  # minimum detector confidence for a face to be kept

# Define font
font = cv2.FONT_HERSHEY_DUPLEX
font_scale = 0.5
font_color = (255, 255, 255)
font_thickness = 1

output_frames = []
while True:
    success, frame = video_capture.read()
    if not success:
        break  # end of the video (or a read error)
    # OpenCV decodes frames as BGR; convert once so the query faces match the
    # RGB support set and MoviePy later receives RGB frames
    image = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)
    detections = embedder.extract(image, threshold=detection_threshold)
    position = (10, 30)
    y_offset = 0
    for det in detections:
        x, y, w, h = det["box"]
        # The detector can return slightly negative coordinates; clamp them
        x1, y1 = max(0, x), max(0, y)
        x2, y2 = x + w, y + h
        face = image[y1:y2, x1:x2, :]
        if face.size == 0:
            continue  # skip degenerate boxes
        face_feature = embedder.embeddings(preprocess(face))
        distances = calculate_query_distance(face_feature[0], support_set_vectors)
        actor_name = actor_names[int(np.argmin(distances))]  # nearest actor
        # Stack one name per detected face in the top-left corner
        cv2.putText(
            image,
            actor_name,
            (position[0], position[1] + y_offset),
            font,
            font_scale,
            font_color,
            font_thickness,
            lineType=cv2.LINE_AA,
        )
        y_offset += int(40 * font_scale)
    output_frames.append(image)

video_capture.release()
```

6. Overlay the recognition results on the video

To combine the annotated frames with the original video and its audio, I use the MoviePy library:

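```bash
pip install "moviepy<2.0"
```

Create the final video. Note: this code was generated by ChatGPT, and it uses the MoviePy 1.x API (`moviepy.editor` was removed and `set_audio` was renamed to `with_audio` in MoviePy 2.0).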

```python
import moviepy.editor as mp
from moviepy.video.io.VideoFileClip import VideoFileClip

# Load the original video and extract audio
original_video = VideoFileClip(video_path)
audio = original_video.audio
fps = original_video.fps

# Create a video from the annotated frames (output_frames holds RGB frames)
annotated_clip = mp.ImageSequenceClip(output_frames, fps=fps)
annotated_clip = annotated_clip.set_audio(audio)  # Add the original audio

# Export the final video
annotated_clip.write_videofile(
    "/content/output_with_actor_name.mp4",
    codec="libx264",
    fps=fps,
    audio_codec="aac",
)
```

Here is an example of the final output:

Original article: How to Recognize Actor Names in Each Movie Scene Using AI

Translated and compiled by 汇智网. Please credit the source when reposting.