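ResumeGPT: A Resume Q&A Chatbot

This article shows how to use retrieval-augmented generation to build an application that lets you chat, in natural language, with a resume stored as a PDF document.

Experience with GenAI and LLMs has become a common theme in job descriptions for new data science and business intelligence roles. To build a live application, I decided to build a chatbot that answers questions about me. That led me to retrieval-augmented generation (RAG), an alternative to fine-tuning, which is expensive and time-consuming.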

1. Retrieval-Augmented Generation

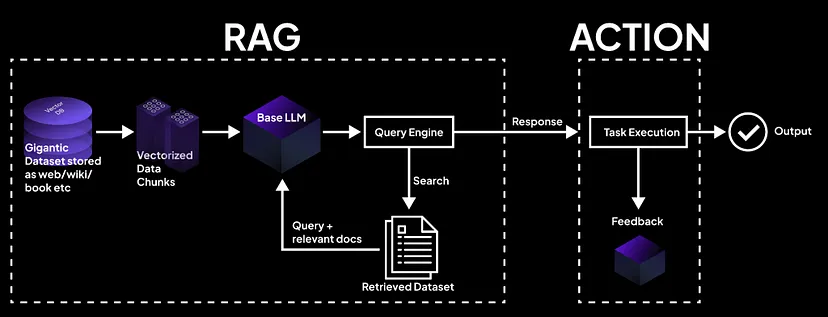

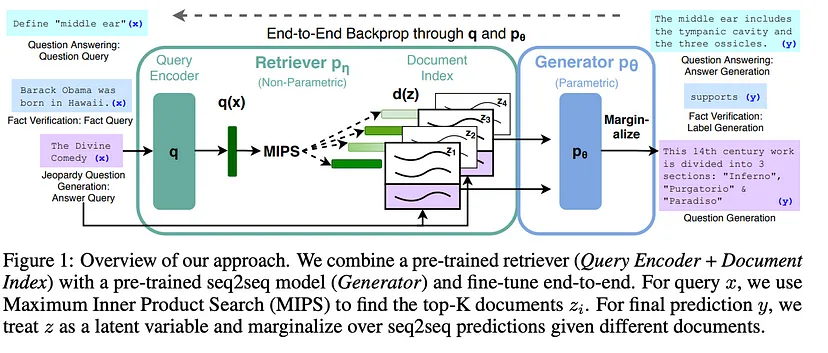

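Retrieval-augmented generation (RAG) is an approach to language generation that combines a pre-trained (parametric) model with non-parametric memory. In practice, RAG pairs an information retrieval component with a text generation model: the retriever finds relevant passages, and the generator answers using them.

RAG was introduced by Lewis et al. in the paper "Retrieval-Augmented Generation for Knowledge-Intensive NLP Tasks". When we have one comprehensive document about a person, such as a resume, RAG is a good fit for the use case.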
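The retrieve-then-generate idea can be illustrated without any external service. The following is a minimal sketch, not the pipeline used later in this article: it assumes a toy bag-of-words similarity in place of real embeddings, and the sample snippets and the names `retrieve` and `build_prompt` are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine_similarity(a, b):
    """Cosine similarity between two bag-of-words Counters."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(question, documents, k=2):
    """Return the k documents most similar to the question."""
    q = Counter(tokenize(question))
    scored = [(cosine_similarity(q, Counter(tokenize(d))), d) for d in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [d for _, d in scored[:k]]


def build_prompt(question, documents, k=2):
    """Place the retrieved snippets in front of the question."""
    context = "\n".join(retrieve(question, documents, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


# Hypothetical resume snippets (illustration only)
documents = [
    "Worked as a data scientist building churn prediction models.",
    "Completed a master's degree in business analytics.",
    "Enjoys hiking and photography on weekends.",
]

print(build_prompt("Which degree was completed?", documents, k=1))
```

In a real RAG system the prompt built this way is sent to the language model; only the retrieval and prompt-assembly steps are shown here.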

2. Tech Stack

The code in this article needs the following:

- Python 3.9 or later

- An OpenAI API key (minimum: $5 of credit)

- A Firebase project with Cloud Firestore

2.1 OpenAI 信用额度

请按照此分步指南操作。如果你没有 OpenAI 帐户,请先创建一个。

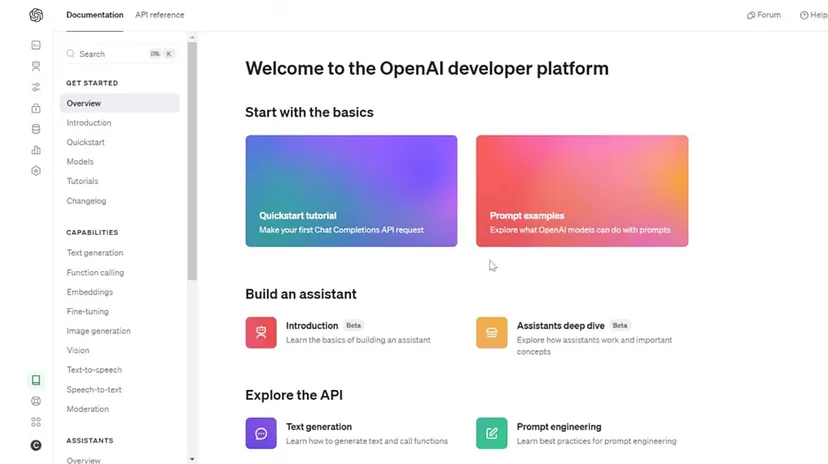

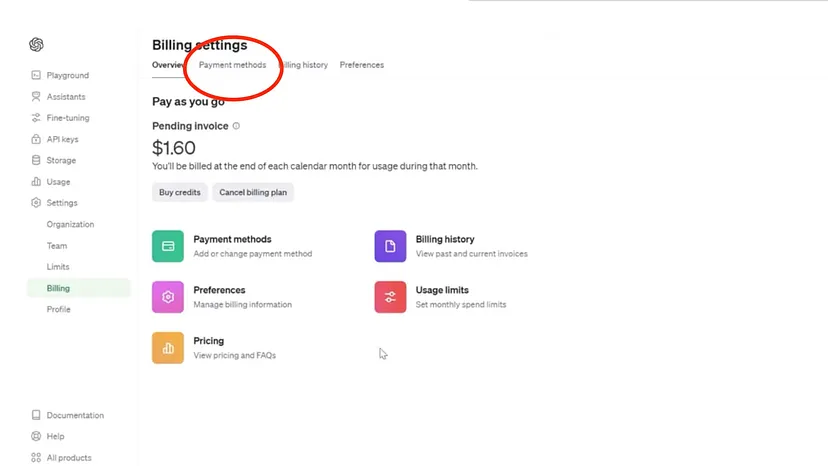

转到你的 OpenAI 帐户:这会将你带到平台的主页。

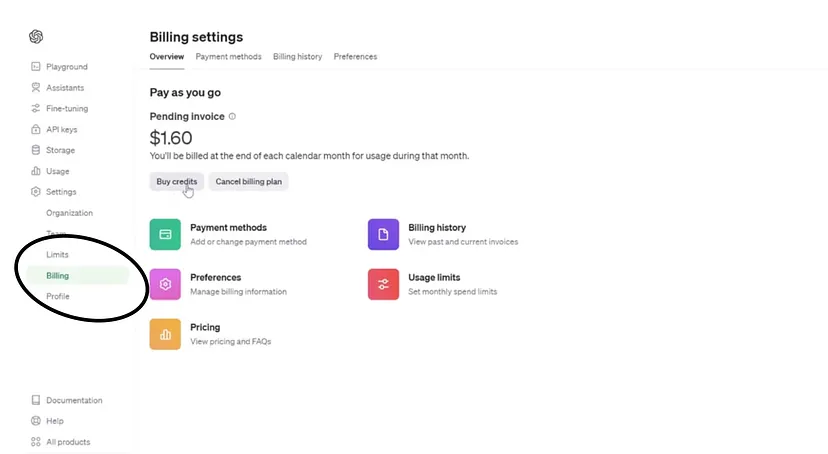

导航到“设置”:在屏幕的最左侧,单击“设置”。它提供了你的信用额度和使用情况的概述。然后点击“结算”。

转到“付款方式”:此部分允许你添加付款方式,你将在其中提供详细信息,就像任何其他在线交易一样。

添加信用额度:通过结算页面添加信用额度。OpenAI API 信用额度的最低购买额度为 5 美元起,更高的限额取决于您的 OpenAI 信任等级。信任等级根据 OpenAI 确定的各种因素授予用户不同的访问级别和使用情况。

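2.2 Cloud Firestore database

Create a Firebase project

If you haven't created a Firebase project yet, create one first: in the Firebase console, click "Add project", then follow the on-screen instructions to create a Firebase project or add Firebase services to an existing Google Cloud project.

Open your project in the Firebase console. In the left panel, expand "Build" and select "Firestore Database".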

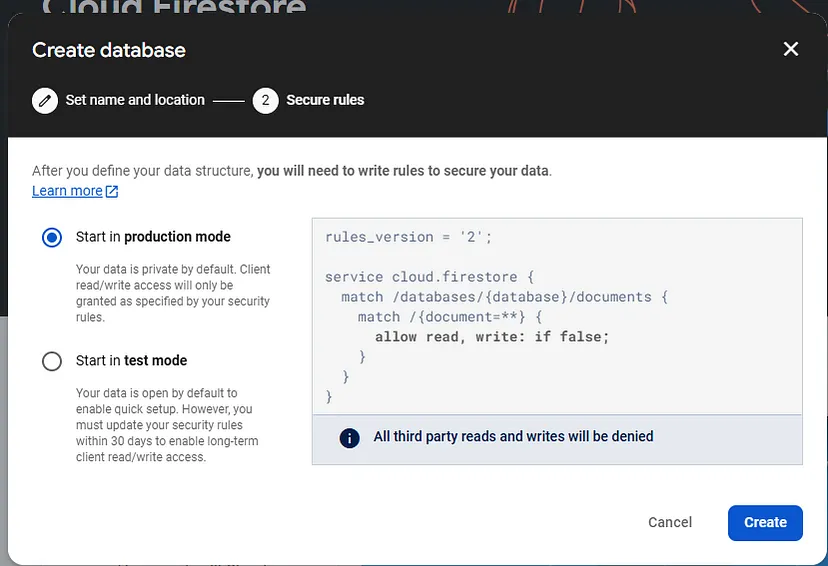

Click "Create database".

Choose a location for your database.

Select "locked mode" for your Cloud Firestore security rules.

Click "Create".

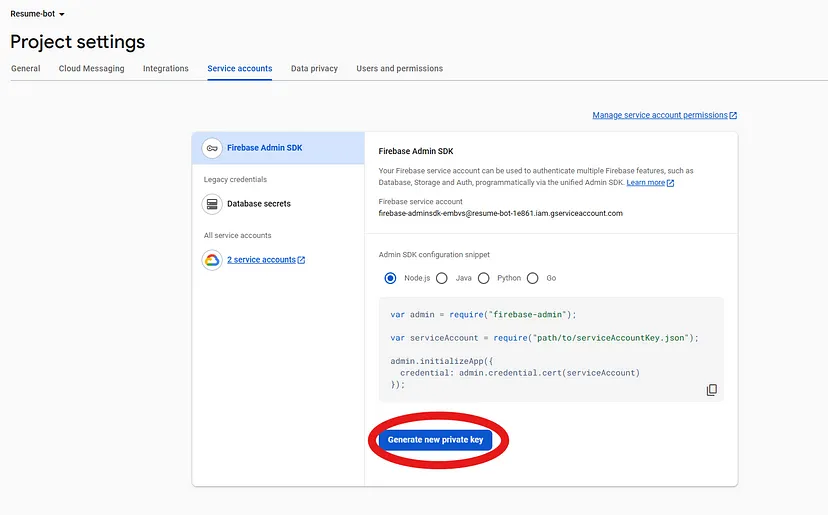

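Generate a private key file for your service account:

In the Firebase console, open "Settings" > "Service accounts".

Click "Generate new private key", then confirm by clicking "Generate key".

Store the JSON file containing the key securely.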

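2.3 FAISS vector database

FAISS is an open-source library developed by Facebook AI Research for efficient similarity search and clustering of dense vector embeddings. It provides a collection of algorithms and data structures optimized for different kinds of similarity search, enabling fast and accurate retrieval of nearest neighbors in high-dimensional spaces.

We use FAISS to build the similarity search for our RAG application.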
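Conceptually, the simplest form of nearest-neighbor search compares the query vector with every stored vector. Here is a minimal pure-Python sketch of that brute-force search under Euclidean distance; the three-dimensional vectors are made-up stand-ins for real embeddings, and FAISS itself implements this (and much faster approximate variants) in optimized native code.

```python
import math


def l2_distance(a, b):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def nearest_neighbors(query, vectors, k=2):
    """Return (index, distance) pairs for the k vectors closest to the query."""
    distances = [(i, l2_distance(query, v)) for i, v in enumerate(vectors)]
    distances.sort(key=lambda pair: pair[1])
    return distances[:k]


# Hypothetical 3-dimensional "embeddings" (real ones have hundreds of dimensions)
vectors = [
    [0.9, 0.1, 0.0],
    [0.0, 1.0, 0.0],
    [0.8, 0.2, 0.1],
]
query = [1.0, 0.0, 0.0]

result = nearest_neighbors(query, vectors, k=2)
print([index for index, _ in result])
```

The returned indices identify which stored chunks are closest to the query; in the application, those chunks become the context passed to the language model.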

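2.4 LangChain

LangChain is an open-source orchestration framework for developing applications with large language models (LLMs). Its tools and APIs simplify building LLM-driven applications such as chatbots and virtual agents.

At its core, LangChain is a development environment that simplifies LLM application programming through abstraction: one or more complex processes are represented as a named component that encapsulates all of its constituent steps.

Here we use LangChain for the following:

- Importing language models

- Prompts

- Document loaders

- Text splitters

- Retrieval

- Chains

- Agents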
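Of the components listed above, the text splitter is the easiest to picture in isolation. The sketch below is a simplified fixed-window splitter, assuming chunks are cut purely by character count; LangChain's `CharacterTextSplitter` additionally splits on a separator first, so its chunk boundaries will differ. The function name `split_with_overlap` is hypothetical.

```python
def split_with_overlap(text, chunk_size=400, chunk_overlap=40):
    """Cut text into chunks of chunk_size characters that overlap by chunk_overlap."""
    if chunk_overlap >= chunk_size:
        raise ValueError("chunk_overlap must be smaller than chunk_size")
    step = chunk_size - chunk_overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        # Stop once the current chunk already reaches the end of the text
        if start + chunk_size >= len(text):
            break
    return chunks


# Tiny example so the overlap is easy to see
print(split_with_overlap("abcdefghij", chunk_size=4, chunk_overlap=1))
```

The overlap means a sentence that straddles a chunk boundary still appears whole in at least one chunk, which tends to help retrieval.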

2.5 Streamlit

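Streamlit is a free, open-source framework for quickly building and sharing polished machine learning and data science web apps. It lets us turn Python scripts into interactive web apps without having to learn front-end technologies such as HTML, CSS, and JavaScript. Install it and run the built-in demo: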

pip install streamlit

streamlit hello

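3. Proof of Concept

TL;DR: you can check out this notebook.

3.1 Loading the resume PDF and Q&A CSV file

Use the following code to load your resume and Q&A file. You can use the following Q&A file as a template for creating your own.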

import os

import pypdf  # not used directly; PyPDFLoader requires the pypdf package
from langchain.document_loaders.csv_loader import CSVLoader
from langchain.text_splitter import CharacterTextSplitter
from langchain_community.document_loaders import PyPDFLoader

data_source = "data/about_hari.csv"
pdf_source = "data/HarikrishnaDev_DataScientist.pdf"

# Split on newlines into chunks of roughly 400 characters with a 40-character overlap
text_splitter = CharacterTextSplitter(
    separator="\n",
    chunk_size=400,
    chunk_overlap=40
)

# Load the resume PDF and split it into chunks
pdf_loader = PyPDFLoader(pdf_source)
pdf_data = pdf_loader.load_and_split(text_splitter=text_splitter)

# Load the Q&A CSV; each row becomes one document
csv_loader = CSVLoader(file_path=data_source, encoding="utf-8")
csv_data = csv_loader.load()

# Combine PDF and CSV data
data = pdf_data + csv_data

3.2 Creating a FAISS index from the documents and OpenAI embeddings

Make sure to enter your OpenAI API key in the following code:

import os

from langchain.embeddings.openai import OpenAIEmbeddings
from langchain.vectorstores import FAISS

# Input your OpenAI key
os.environ['OPENAI_API_KEY'] = "#Enter OpenAI Key"

# Calling the OpenAI Embeddings function
embeddings = OpenAIEmbeddings()

# Create embeddings for the docs
vectors = FAISS.from_documents(data, embeddings)

# Save the embeddings in local
vectors.save_local("faiss_index")

3.3 Creating the prompt for the resume bot agent

The prompt sets up the agent so that the application only answers questions related to you:

{
  "_type": "prompt",
  "input_variables": [
    "context",
    "question"
  ],
  "template":"System: You are a Resume Bot, a comprehensive, interactive resource for exploring Harikrishna (Harry) Dev's background, skills, and expertise. Be polite and provide answers based on the provided context only. Use only the provided data and not prior knowledge. \n Human: Take a deep breath and do the following step by step these 4 steps: \n 1. Read the context below \n 2. Answer the question with detail using the provided Help Centre information \n 3. Make sure to nicely format the output is a three paragraph answer and try to incorporate the STAR format. \n 4. Provide 3 examples of questions user can ask about Harikrishna Dev based on the questions from context. Context : \n ~~~ {context} ~~~ \n User Question: --- {question} --- \n \n If a question is directed at you, clarify that you are merely a Resume Bot and proceed to answer as if the question were addressed to Harikrishna Dev. If you lack the necessary information to respond, simply state that you don't know; do not fabricate an answer. If a query isn't related to Harikrishna's background, include the skills and expertise in one of the projects in the response or generate a fictional project with the skill in question. Offer three sample questions users could ask about Harikrishna Dev for further clarity. When responding, aim for detail but limit your answer to a maximum of 150 words. Ensure your response is formatted for easy reading. Your output should be in a json format with 3 keys: answered - type boolean, response - markdown of your answer, questions - list of 3 suggested questions. Ensure your response is formatted for easy reading and please use only context to answer the question - my job depends on it. \n\n ```json"
}

3.4 Creating the conversational chain with LangChain tools and the FAISS retriever

Load the prompt from the JSON file, create a FAISS retriever, and create a conversational retrieval chain to test the application and the index.
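Before looking at the LangChain version, it may help to see what loading and filling a prompt template amounts to. This is a minimal standard-library sketch, assuming a shortened stand-in for templates/template.json and plain `str.format` substitution; `load_template` and `fill_prompt` are hypothetical helpers, not LangChain functions.

```python
import json

# Hypothetical, shortened stand-in for templates/template.json
template_json = """
{
  "_type": "prompt",
  "input_variables": ["context", "question"],
  "template": "Context:\\n~~~ {context} ~~~\\nUser Question: --- {question} ---"
}
"""


def load_template(raw):
    """Parse the JSON spec and return the template string and its variable names."""
    spec = json.loads(raw)
    return spec["template"], spec["input_variables"]


def fill_prompt(template, input_variables, **values):
    """Substitute every declared variable; fail loudly if one is missing."""
    missing = [name for name in input_variables if name not in values]
    if missing:
        raise KeyError(f"missing prompt variables: {missing}")
    return template.format(**{name: values[name] for name in input_variables})


template, variables = load_template(template_json)
prompt_text = fill_prompt(
    template,
    variables,
    context="Hari interned at Daifuku.",  # would come from the retriever
    question="Where did Hari intern?",    # would come from the user
)
print(prompt_text)
```

In the chain, the retrieved chunks take the place of `context` and the (possibly rephrased) user question takes the place of `question`.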

from langchain.chains import ConversationalRetrievalChain
from langchain.chat_models import ChatOpenAI
from langchain.prompts import load_prompt

openai_api_key = os.getenv("OPENAI_API_KEY")

# Load the prompt template created in section 3.3
prompt = load_prompt("templates/template.json")

# Retrieve the 6 most similar chunks from the FAISS index
retriever = vectors.as_retriever(search_type="similarity", search_kwargs={"k":6, "include_metadata":True, "score_threshold":0.6})

chain = ConversationalRetrievalChain.from_llm(
    llm=ChatOpenAI(temperature=0.5, model_name='gpt-3.5-turbo', openai_api_key=openai_api_key),
    retriever=retriever,
    return_source_documents=True,
    verbose=True,
    chain_type="stuff",
    max_tokens_limit=4097,
    combine_docs_chain_kwargs={"prompt": prompt},
)

chat_history = []

# Note: the prompt template only defines "context" and "question",
# so the extra "system" key below is not substituted into it.
result = chain({
    "system": "You are a Resume Bot, a comprehensive, interactive resource for exploring Harikrishna (Harry) Dev's background, skills, and expertise. Be polite and provide answers based on the provided context only. Use only the provided data and not prior knowledge.",
    "question": "Has Hari worked on GenAI?",
    "chat_history": chat_history,
})

Output:

> Entering new StuffDocumentsChain chain...

> Entering new LLMChain chain...

Prompt after formatting:

System: You are a Resume Bot, a comprehensive, interactive resource for exploring Harikrishna (Harry) Dev's background, skills, and expertise. Be polite and provide answers based on the provided context only. Use only the provided data and not prior knowledge.

Human: Take a deep breath and do the following step by step these 4 steps:

1. Read the context below

2. Answer the question with detail using the provided Help Centre information

3. Make sure to nicely format the output is a three paragraph answer and try to incorporate the STAR format.

4. Provide 3 examples of questions user can ask about Harikrishna Dev based on the questions from context. Context :

~~~ question: Has Hari worked with VertexAI? | Has Hari worked with OpenAI API? | Has Hari worked with HuggingFace API?

answer: Yes, Harikrishna has worked with the HuggingFace API, Azure ML, OpenAI API and Google Cloud AI Platform. He developed a proprietary Generative AI tool using the HuggingFace API for text mining reports during his internship at Daifuku. He also has experience with Azure ML and Google Cloud AI Platform for model training and deployment.

question: What is Hari doing right now; Tell me something about Hari | what can you tell me about Hari

answer: Harikrishna is currently working on a survey paper about synthetic text generation and building a GenAI project for enterprise databases. He is also exploring cost-effective mechanisms by leveraging open-source libraries to decrease dependencies on proprietary software, aiming to streamline processes and reduce costs for businesses. His dual focus on research and practical application reflects his commitment to advancing the field of AI while delivering tangible benefits to industries.\

question: Does Hari have any publications| does he have any patents

answer: Not as of now, but Hari is working on three papers to build his understandning on enterpirse ready AI solutions

question: what project is Hari most proud of

answer: Harikrishna's favorite project is the GenAI application he developed during his internship at Daifuku. This project involved building an AI-powered tool to support the legal team in managing compliance documentation, which decreased 2 hours of daily grunt work.

question: What motivates Hari?

answer: Harikrishna is deeply motivated by the transformative potential of AI and data science. He sees these fields as catalysts for positive change, offering endless possibilities to solve complex problems, improve lives, and drive innovation. This sense of purpose and the opportunity to make a meaningful impact fuels his passion and drives him to constantly learn, explore, and push boundaries in the field.

question: Tell me about Hari experience | tell me about his job | where is he working right now

answer: Hari recently completed an internship as an IT Analyst Intern - Data Science at Daifuku Holding Pvt Ltd in Novi, MI from May 2023 to August 2023. During this internship, he developed a proprietary Generative AI tool for text mining using Natural Language Processing and the HuggingFace API, which reduced daily workload by 2 hours. He also improved the Paint Line assembly efficiency by 48% through regularized linear modeling techniques like Elastic Net and Facebook Prophet, and designed a Power BI dashboard connected to Microsoft Azure for visualizing employee performance metrics. Prior to this internship, he worked as a Data Scientist - Business Analyst at Flipkart Internet Pvt Ltd from July 2021 to July 2022, conducting customer experience analysis, prediction modeling, and A/B testing. Before that, he was a Senior Business Analyst - Data Science at Walmart and Sam's Club from May 2019 to July 2021, where he analyzed customer experience metrics, guided strategic decision-making, and implemented data pipelines and customer analytics. The resume does not explicitly mention his current role after August 2023, but it states that he is pursuing his Master's in Business Analytics - Data Science at The University of Texas at Dallas, with an expected graduation in December 2023, and is open to relocation, implying he is likely a full-time student currently. ~~~

User Question: --- Has Hari worked on GenAI? ---

If a question is directed at you, clarify that you are merely a Resume Bot and proceed to answer as if the question were addressed to Harikrishna Dev. If you lack the necessary information to respond, simply state that you don't know; do not fabricate an answer. If a query isn't related to Harikrishna's background, include the skills and expertise in one of the projects in the response or generate a fictional project with the skill in question. Offer three sample questions users could ask about Harikrishna Dev for further clarity. When responding, aim for detail but limit your answer to a maximum of 150 words. Ensure your response is formatted for easy reading. Your output should be in a json format with 3 keys: answered - type boolean, response - markdown of your answer, questions - list of 3 suggested questions. Ensure your response is formatted for easy reading and please use only context to answer the question - my job depends on it.

```json

> Finished chain.

> Finished chain.

Append the question and answer to the chat history:

chat_history.append((result["question"], result["answer"]))

Use the chat history in later questions:

result = chain({
    "system": "You are a Resume Bot, a comprehensive, interactive resource for exploring Harikrishna (Harry) Dev's background, skills, and expertise. Be polite and provide answers based on the provided context only. Use only the provided data and not prior knowledge.",
    "question": "Can you provide more details about the GenAI application Harikrishna worked on?",
    "chat_history": chat_history,
})

Output:

> Entering new LLMChain chain...

Prompt after formatting:

Given the following conversation and a follow up question, rephrase the follow up question to be a standalone question, in its original language.

Chat History:

Human: Has Hari worked on GenAI?

Assistant: {

"answered": true,

"response": "Yes, Harikrishna has worked on GenAI. His favorite project is the GenAI application he developed during his internship at Daifuku. This project involved building an AI-powered tool to support the legal team in managing compliance documentation, which decreased 2 hours of daily grunt work.",

"questions": [

"What was Harikrishna's favorite project?",

"Can you provide more details about the GenAI application Harikrishna worked on?",

"How did GenAI impact the daily workflow at Daifuku?"

]

}

Follow Up Input: Can you provide more details about the GenAI application Harikrishna worked on?

Standalone question:

> Finished chain.

> Entering new StuffDocumentsChain chain...

> Entering new LLMChain chain...

Prompt after formatting:

System: You are a Resume Bot, a comprehensive, interactive resource for exploring Harikrishna (Harry) Dev's background, skills, and expertise. Be polite and provide answers based on the provided context only. Use only the provided data and not prior knowledge.

Human: Take a deep breath and do the following step by step these 4 steps:

1. Read the context below

2. Answer the question with detail using the provided Help Centre information

3. Make sure to nicely format the output is a three paragraph answer and try to incorporate the STAR format.

4. Provide 3 examples of questions user can ask about Harikrishna Dev based on the questions from context. Context :

~~~ question: what project is Hari most proud of

answer: Harikrishna's favorite project is the GenAI application he developed during his internship at Daifuku. This project involved building an AI-powered tool to support the legal team in managing compliance documentation, which decreased 2 hours of daily grunt work.

question: What is Hari doing right now; Tell me something about Hari | what can you tell me about Hari

answer: Harikrishna is currently working on a survey paper about synthetic text generation and building a GenAI project for enterprise databases. He is also exploring cost-effective mechanisms by leveraging open-source libraries to decrease dependencies on proprietary software, aiming to streamline processes and reduce costs for businesses. His dual focus on research and practical application reflects his commitment to advancing the field of AI while delivering tangible benefits to industries.\

question: Has Hari worked with VertexAI? | Has Hari worked with OpenAI API? | Has Hari worked with HuggingFace API?

answer: Yes, Harikrishna has worked with the HuggingFace API, Azure ML, OpenAI API and Google Cloud AI Platform. He developed a proprietary Generative AI tool using the HuggingFace API for text mining reports during his internship at Daifuku. He also has experience with Azure ML and Google Cloud AI Platform for model training and deployment.

question: Tell me about his technical skills ; What technical skills does he know

answer: Harikrishna has demonstrated versatile technical skills across programming, data analysis, machine learning, and cloud platforms. He developed a proprietary Generative AI tool using Natural Language Processing and the HuggingFace API for text mining reports. He applied regularized linear modeling techniques like Elastic Net and Facebook Prophet to optimize assembly line efficiency. His skills with data visualization tools like Power BI and Azure enabled creating dashboards for tracking performance metrics. As a Data Scientist at Flipkart, he utilized machine learning algorithms like XGBoost and CV for customer escalation prediction. He conducted A/B testing analysis during major e-commerce events to drive revenue. His data engineering expertise with Apache Spark facilitated implementing large data pipelines. Harikrishna has experience with frameworks like PyTorch and FastAI for computer vision tasks like semantic segmentation using CNNs. His skills span cloud platforms like AWS and GCP as well as productivity tools like SQL, Tableau, MATLAB and MS Office suite.

question: Tell me about Hari\'s strengths | what about Harikrishna\'s strengths?

answer: Harikrishna is known for his expertise in AI and deep learning, particularly in the development and deployment of Large Language Models (LLMs) and Machine Learning solutions for enterprise applications. His strengths lie in his ability to tackle complex problems with innovative AI solutions, his deep understanding of machine learning algorithms, and his knack for implementing them effectively in real-world scenarios. Additionally, Harikrishna is praised for his strong analytical skills, attention to detail, and commitment to delivering high-quality results. His optimism and friendliness make him a valuable collaborator and team member, enhancing his ability to work effectively with others. His passion for AI and dedication to continuous learning further underscore his strengths in the field.

question: Has he wons any awards | Has he participated in any hackathons | Has he won any competitions

answer: Harikrishna has won several awards and accolades for his work in AI and data science. He is winner of the Lantern Hackathon with his Neural Network project in drug response prediction and FinHack UTD 2024 with his Customer Churn prediction algorithm. He was the captain of BAJA NITK RACING, which won the Best Team award in the 2019 session of BAJA SAE INDIA. He has also led teams in projects involving model simulation and data engineering at Flipkart and Tredence. His leadership skills, problem-solving abilities, and passion for innovation have been recognized in various competitions and projects. ~~~

User Question: --- What were the details of the GenAI application that Harikrishna worked on? ---

If a question is directed at you, clarify that you are merely a Resume Bot and proceed to answer as if the question were addressed to Harikrishna Dev. If you lack the necessary information to respond, simply state that you don't know; do not fabricate an answer. If a query isn't related to Harikrishna's background, include the skills and expertise in one of the projects in the response or generate a fictional project with the skill in question. Offer three sample questions users could ask about Harikrishna Dev for further clarity. When responding, aim for detail but limit your answer to a maximum of 150 words. Ensure your response is formatted for easy reading. Your output should be in a json format with 3 keys: answered - type boolean, response - markdown of your answer, questions - list of 3 suggested questions. Ensure your response is formatted for easy reading and please use only context to answer the question - my job depends on it.

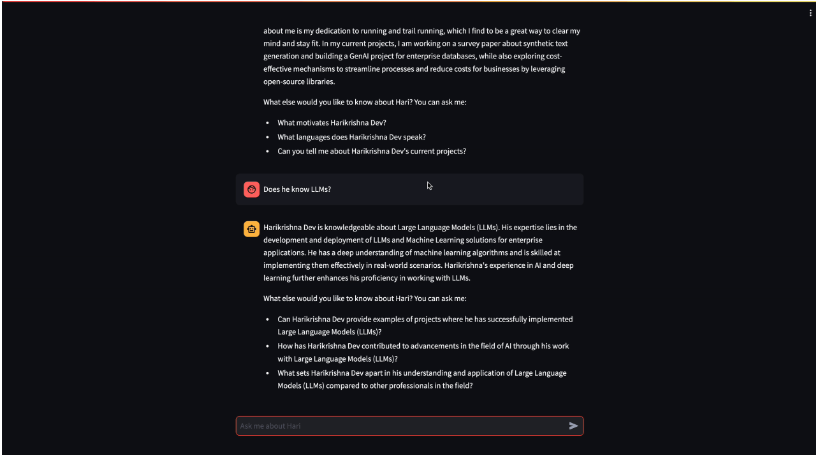

```json

3.5 Creating the Streamlit web app

import datetime
import json
import os
import time
import uuid

import firebase_admin
import streamlit as st
from firebase_admin import credentials
from firebase_admin import firestore
from langchain.chains import ConversationalRetrievalChain
from langchain.chat_models import ChatOpenAI
from langchain.document_loaders.csv_loader import CSVLoader
from langchain.embeddings.openai import OpenAIEmbeddings
from langchain.prompts import load_prompt
from langchain.text_splitter import CharacterTextSplitter
from langchain.vectorstores import FAISS
from langchain_community.document_loaders import PyPDFLoader
from streamlit import session_state as ss

# Function to check if a string is a valid JSON
def is_valid_json(data):
    try:
        json.loads(data)
        return True
    except (json.JSONDecodeError, TypeError):
        # TypeError covers non-string input such as None
        return False

# Read the service account key from the environment, falling back to Streamlit secrets
if "firebase_json_key" in os.environ:
    firebase_json_key = os.getenv("firebase_json_key")
else:
    firebase_json_key = st.secrets["firebase_json_key"]
firebase_credentials = json.loads(firebase_json_key)

# Function to initialize connection to Firebase Firestore
@st.cache_resource
def init_connection():
    cred = credentials.Certificate(firebase_credentials)
    firebase_admin.initialize_app(cred)
    return firestore.client()

# Attempt to connect to Firebase Firestore
try:
    db = init_connection()
except Exception as e:
    st.write("Failed to connect to Firebase:", e)

# Access Firebase Firestore collection
if 'db' in locals():
    conversations_collection = db.collection('conversations')
else:
    st.write("Unable to access conversations collection. Firebase connection not established.")

# Retrieve OpenAI API key
if "OPENAI_API_KEY" in os.environ:
    openai_api_key = os.getenv("OPENAI_API_KEY")
else:
    openai_api_key = st.secrets["OPENAI_API_KEY"]

# Streamlit app title and disclaimer
st.title("HariGPT - Hari's resume bot")
st.image("images/about.jpg", use_column_width=True)
with st.expander("⚠️Disclaimer"):
    st.write("""This bot is a LLM trained on GPT-3.5-turbo model to answer questions about Hari's professional background and qualifications. Your responses are recorded in a database for quality assurance and improvement purposes. Please be respectful and avoid asking personal or inappropriate questions.""")

# Define file paths and load initial settings
path = os.path.dirname(__file__)
prompt_template = path+"/templates/template.json"
prompt = load_prompt(prompt_template)
faiss_index = path+"/faiss_index"
data_source = path+"/data/about_hari.csv"
pdf_source = path+"/data/HarikrishnaDev_DataScientist.pdf"

# Function to store conversation in Firebase
def store_conversation(conversation_id, user_message, bot_message, answered):
    timestamp = datetime.datetime.now().strftime("%Y-%m-%d %H:%M:%S")
    data = {
        "conversation_id": conversation_id,
        "timestamp": timestamp,
        "user_message": user_message,
        "bot_message": bot_message,
        "answered": answered
    }
    conversations_collection.add(data)

# Initialize OpenAI embeddings
embeddings = OpenAIEmbeddings()

# Load FAISS index or create a new one if it doesn't exist
if os.path.exists(faiss_index):
    vectors = FAISS.load_local(faiss_index, embeddings, allow_dangerous_deserialization=True)
else:
    # Load data from PDF and CSV sources
    text_splitter = CharacterTextSplitter(
        separator="\n",
        chunk_size=400,
        chunk_overlap=40
    )
    pdf_loader = PyPDFLoader(pdf_source)
    pdf_data = pdf_loader.load_and_split(text_splitter=text_splitter)
    csv_loader = CSVLoader(file_path=data_source, encoding="utf-8")
    csv_data = csv_loader.load()
    data = pdf_data + csv_data
    # Create embeddings for the documents and save the index
    vectors = FAISS.from_documents(data, embeddings)
    # Save to the same path that is checked above, so the next run finds the index
    vectors.save_local(faiss_index)

# Initialize conversational retrieval chain
retriever = vectors.as_retriever(search_type="similarity", search_kwargs={"k": 6, "include_metadata": True, "score_threshold": 0.6})
chain = ConversationalRetrievalChain.from_llm(
    llm=ChatOpenAI(temperature=0.5, model_name='gpt-3.5-turbo-0125', openai_api_key=openai_api_key),
    retriever=retriever,
    return_source_documents=True,
    verbose=True,
    chain_type="stuff",
    max_tokens_limit=4097,
    combine_docs_chain_kwargs={"prompt": prompt},
)

# Function to handle conversational chat
def conversational_chat(query):
    with st.spinner("Thinking..."):
        result = chain({
            "system": "You are a Resume Bot, a comprehensive, interactive resource for exploring Harikrishna (Harry) Dev's background, skills, and expertise. Be polite and provide answers based on the provided context only. Only answer questions relevant to Harikrishna and his work experience. Answer question if there are ONLY regarding Harikrishna Dev. If the questions is not relevant to Harikrishna, reply that you are a Resume bot. You can make up projects with the skills and projects I have if the question requests a skill set related to Machine Learning, Database management or Computer sciences.",
            "question": query,
            "chat_history": st.session_state['history'],
        })

    # Check if the result is a valid JSON
    if is_valid_json(result["answer"]):
        data = json.loads(result["answer"])
    else:
        # Fallback reply; "answered" is a boolean, as the prompt specifies
        data = {
            "answered": False,
            "response": "Hmm... Something is not right. I'm experiencing technical difficulties. Try asking your question again or ask another question about Hari's professional background and qualifications. Thank you for your understanding.",
            "questions": ["What is Hari's professional experience?", "What projects has Hari worked on?", "What are Hari's career goals?"],
        }

    answered = data.get("answered")
    # Default to empty values so a missing key does not raise below
    response = data.get("response") or ""
    questions = data.get("questions") or []
    full_response = "--"

    # Append user query and bot response to chat history
    st.session_state['history'].append((query, response))

    # Process the response based on the answer status
    if ('I am tuned to only answer questions' in response) or (response == ""):
        full_response = """Unfortunately, I can't answer this question. My capabilities are limited to providing information about Hari's professional background and qualifications. If you have other inquiries, I recommend reaching out to Hari on [LinkedIn](https://www.linkedin.com/in/harikrishnad1997/). I can answer questions like: \n - What is Hari's educational background? \n - Can you list Hari's professional experience? \n - What skills does Hari possess? \n"""
        store_conversation(st.session_state["uuid"], query, full_response, answered)
    else:
        markdown_list = ""
        for item in questions:
            markdown_list += f"- {item}\n"
        full_response = response + "\n\n What else would you like to know about Hari? You can ask me: \n" + markdown_list
        store_conversation(st.session_state["uuid"], query, full_response, answered)
    return full_response

# Initialize session variables if not already present
if "uuid" not in st.session_state:
    st.session_state["uuid"] = str(uuid.uuid4())

if "openai_model" not in st.session_state:
    st.session_state["openai_model"] = "gpt-3.5-turbo-0125"

if "messages" not in st.session_state:
    st.session_state.messages = []
    with st.chat_message("assistant"):
        message_placeholder = st.empty()
        welcome_message = """
Welcome! I'm **Resume Bot**, a virtual assistant designed to provide insights into Harikrishna (Harry) Dev's background and qualifications.

Feel free to inquire about any aspect of Hari's profile, such as his educational journey, internships, professional projects, areas of expertise in data science and AI, or his future goals.

- His Master's in Business Analytics with a focus on Data Science from UTD
- His track record in roles at companies like Daifuku, Flipkart, and Walmart
- His proficiency in programming languages, ML frameworks, data visualization, and cloud platforms
- His passion for leveraging transformative technologies to drive innovation and positive societal impact

What would you like to know first? I'm ready to answer your questions in detail.
"""
        message_placeholder.markdown(welcome_message)

if 'history' not in st.session_state:
    st.session_state['history'] = []

# Display previous chat messages
for message in st.session_state.messages:
    with st.chat_message(message["role"]):
        st.markdown(message["content"])

# Process user input and display bot response
# (named user_input so it does not shadow the prompt template loaded earlier)
if user_input := st.chat_input("Ask me about Hari"):
    st.session_state.messages.append({"role": "user", "content": user_input})
    with st.chat_message("user"):
        st.markdown(user_input)
    with st.chat_message("assistant"):
        message_placeholder = st.empty()
        full_response = conversational_chat(user_input)
        message_placeholder.markdown(full_response)
    st.session_state.messages.append({"role": "assistant", "content": full_response})

After saving the code as app.py, run the following command:

streamlit run app.py

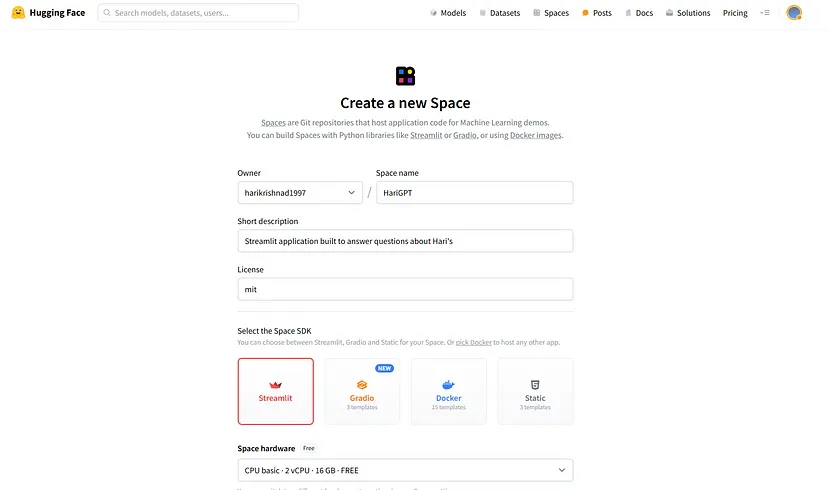
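One practical detail: the prompt ends with an opening json code fence, so the model may end its reply with a closing fence, which a strict `json.loads` call would reject. The following is a hedged sketch of a more tolerant parser, not part of the original app; `parse_model_reply` and `FALLBACK` are hypothetical names, and the fallback text is a placeholder.

```python
import json

FENCE = "`" * 3  # the three-backtick code fence marker

# Hypothetical fallback used when the reply cannot be parsed
FALLBACK = {
    "answered": False,
    "response": "Sorry, something went wrong. Please try again.",
    "questions": [],
}


def parse_model_reply(raw):
    """Parse a JSON reply, tolerating a leading or trailing code fence."""
    text = raw.strip()
    if text.startswith(FENCE):
        # Drop the opening fence line (for example the fence plus "json")
        first_newline = text.find("\n")
        text = text[first_newline + 1:] if first_newline != -1 else ""
    if text.endswith(FENCE):
        text = text[:-len(FENCE)]
    try:
        data = json.loads(text)
    except json.JSONDecodeError:
        return dict(FALLBACK)
    # The app expects an object with answered/response/questions keys
    if not isinstance(data, dict):
        return dict(FALLBACK)
    return data


reply = '{"answered": true, "response": "Yes", "questions": []}\n' + FENCE
print(parse_model_reply(reply)["answered"])
```

A function like this could stand in for the `is_valid_json` check in `conversational_chat`, at the cost of silently accepting slightly malformed replies.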

4. Deploying the app on HuggingFace Spaces

First, create a new Streamlit Space.

Go to HF Spaces, enter a name for the Space, and select the Streamlit SDK.

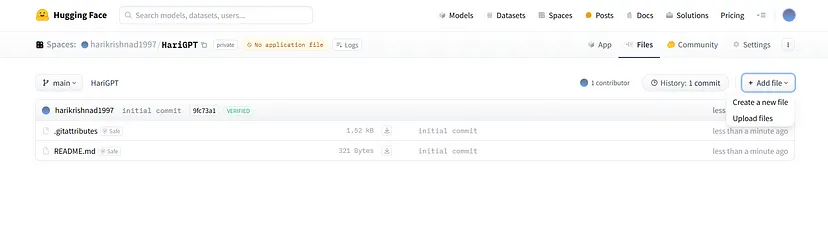

Click "Files", then "Add file" > "Upload files", and upload app.py and requirements.txt.

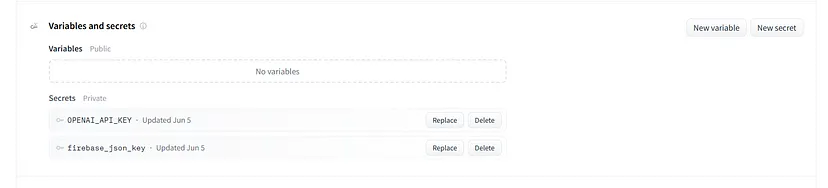

Click "Settings", then add your OPENAI_API_KEY and firebase_json_key under the "Variables and secrets" subsection. The secrets look similar to the code block below:

OPENAI_API_KEY = "#Enter OpenAI Key here"

firebase_json_key = {
  "type": "service_account",
  "project_id": "your_project_id",
  "private_key_id": "your_private_key_id",
  "private_key": "-----BEGIN PRIVATE KEY-----\nYour_Private_Key\n-----END PRIVATE KEY-----\n",
  "client_email": "your_client_email@your_project_id.iam.gserviceaccount.com",
  "client_id": "your_client_id",
  "auth_uri": "https://accounts.google.com/o/oauth2/auth",
  "token_uri": "https://oauth2.googleapis.com/token",
  "auth_provider_x509_cert_url": "https://www.googleapis.com/oauth2/v1/certs",
  "client_x509_cert_url": "https://www.googleapis.com/robot/v1/metadata/x509/your_client_email%40your_project_id.iam.gserviceaccount.com"
}

Next, you can share your site.

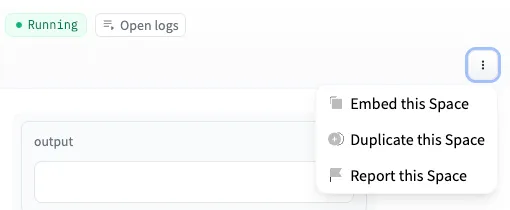

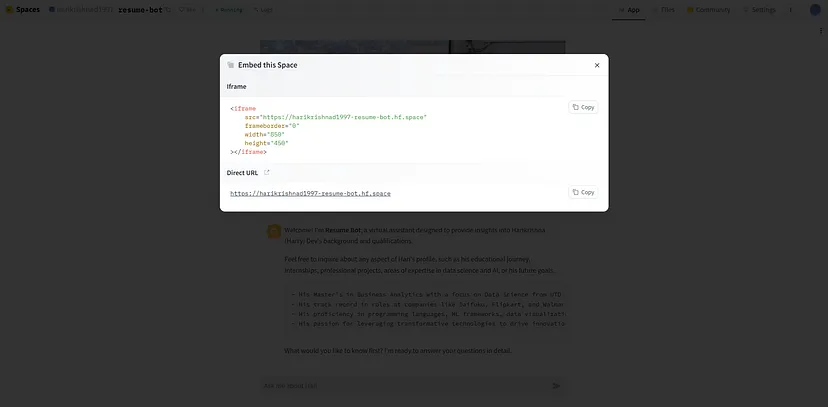

Once the app is running, you can embed the site or share the link using the "Embed this Space" option.
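If the app fails at startup after deployment, a malformed firebase_json_key secret is a likely cause, since the app parses it with `json.loads`. Below is a small sketch of a sanity check you could run locally before uploading the secret. The helper `load_service_account` is hypothetical, and the set of required keys is an assumption chosen for illustration rather than an official list.

```python
import json
import os

# Assumed minimum set of fields; adjust to match your key file
REQUIRED_KEYS = {"type", "project_id", "private_key", "client_email"}


def load_service_account(env=os.environ, name="firebase_json_key"):
    """Read the service account JSON from an environment mapping and validate it."""
    raw = env.get(name)
    if raw is None:
        raise KeyError(f"{name} is not set")
    info = json.loads(raw)  # raises json.JSONDecodeError if the secret is not valid JSON
    missing = REQUIRED_KEYS - info.keys()
    if missing:
        raise ValueError(f"service account JSON is missing keys: {sorted(missing)}")
    return info


# Example with a fake environment mapping (placeholder values only)
fake_env = {
    "firebase_json_key": json.dumps({
        "type": "service_account",
        "project_id": "your_project_id",
        "private_key": "-----BEGIN PRIVATE KEY-----\nYour_Private_Key\n-----END PRIVATE KEY-----\n",
        "client_email": "your_client_email@your_project_id.iam.gserviceaccount.com",
    })
}
print(load_service_account(fake_env)["project_id"])
```

Passing the environment as a parameter keeps the check easy to test without touching real secrets.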

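5. Closing Remarks

Working on a live project greatly deepens your understanding of a range of technologies. You become proficient with libraries such as LangChain and the OpenAI API, and comfortable with NoSQL databases such as Cloud Firestore. You also build a solid foundation in prompt engineering and retrieval-augmented generation (RAG) systems.

Original article: ResumeGPT: A RAG Based LLM Application

Translated and compiled by 汇智网; please credit the source when republishing.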