Detecting Yellow-Line Crossings at Train Stations with YOLO11


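The familiar station announcement echoes: "Attention, do not cross the yellow line while waiting for the train." Yet despite the warning, distracted people often step too close to the yellow line, or even over it, putting their lives in serious danger before the train has even arrived. In this article, I show how to use artificial intelligence to build a smart video surveillance system that detects when someone crosses the yellow line and immediately raises a warning to improve passenger safety.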

故事是这样组织的:

- 第 1 节讨论了如何应用 YOLO11 来检测和跟踪接近车站站台的人。

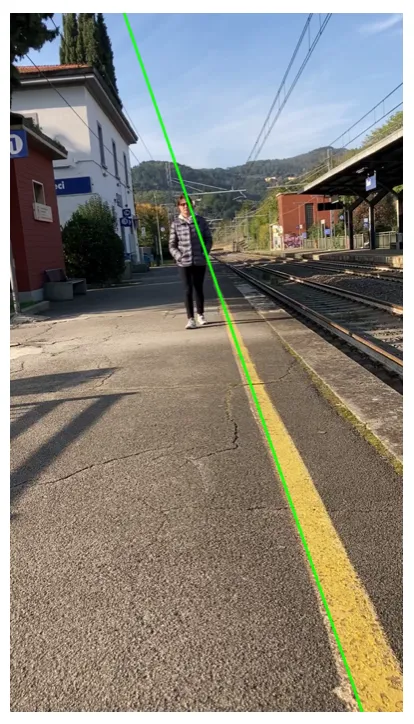

- 第 2 节展示了如何隔离站台附近的黄线,并使用 OpenCV 的 Houng 变换找到相应的方程。

- 在第 3 节中,结合前面的技术,构建了一个人工智能系统,用于拦截等待火车时越过黄线的人。系统逐帧识别人何时越过黄线,并对危及生命的情况发出警报。

- 在实际操作中,应用程序会在黄线后面的人周围绘制一个绿色边界框。一旦有人越过边界框,边界框就会变成红色。在实际部署中,这种检测可能会触发声音警报或语音警报以警告危险。

重现这些实验的代码可在我的 Github 页面上找到。

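YOLO11 and person tracking

In this experiment I use the latest version of YOLO, YOLO11, which offers different approaches to detecting and tracking people.

1. Object detection models

Object detection focuses on identifying the location and class of objects in an image or video stream. The result is a set of bounding boxes around the detected objects, each paired with a class label and a confidence score that indicates how likely the classification is. It is the ideal solution when you need to spot objects of interest (here, people near the tracks) without knowing their exact shape or pixel-level position. YOLO11 provides five pretrained models designed for object detection.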
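As a rough illustration of what a detector returns, the sketch below models each detection as a bounding box, a class label, and a confidence score, and keeps only the confident "person" detections. The `Detection` type and the `keep_people` helper are hypothetical names used for this illustration; they are not part of the ultralytics API, and the sample values are made up.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    """Hypothetical container for one detection (not an ultralytics type)."""
    xyxy: tuple        # (x1, y1, x2, y2) corners in pixels
    label: str         # class name, e.g. "person"
    confidence: float  # score in [0, 1]


def keep_people(detections, min_conf=0.5):
    """Return the detections labelled "person" with confidence >= min_conf."""
    return [d for d in detections
            if d.label == "person" and d.confidence >= min_conf]


# Made-up sample detections, for illustration only
detections = [
    Detection((120, 80, 260, 400), "person", 0.91),
    Detection((300, 200, 380, 330), "suitcase", 0.77),
    Detection((500, 90, 560, 250), "person", 0.32),
]
people = keep_people(detections)
print([d.xyxy for d in people])
```

Only the first detection survives: the second has the wrong class and the third falls below the confidence threshold.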

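In the script below, we use the smallest model, yolo11n.pt, to identify the people in an image and produce their bounding boxes. The plot() method of the ultralytics library makes it easy to visualize the detected people by drawing the bounding boxes directly on the image.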

from ultralytics import YOLO

import argparse

import cv2

if __name__ == '__main__':

'''

Apply bbox to detected persons

'''

parser = argparse.ArgumentParser()

parser.add_argument('--image_path', type=str, default="resources/images/frame_yellow_line_0.png")

opt = parser.parse_args()

image_path = opt.image_path

# Load a pretrained YOLO11n model

model = YOLO("yolo11n.pt")

image = cv2.imread(image_path)

results = model.predict(image)

# check that person has index name=0

print("results[0].names: ", results[0].names)

# iter over results. If there is only one frame then results has only one component

for image_pred in results:

class_names = image_pred.names

boxes = image_pred.boxes

# iter over the detected boxes and select thos of the person if exists

for box in boxes:

if class_names[int(box.cls)] == "person":

print("person")

print("person bbox: ", box.xyxy)

image_pred.plot()

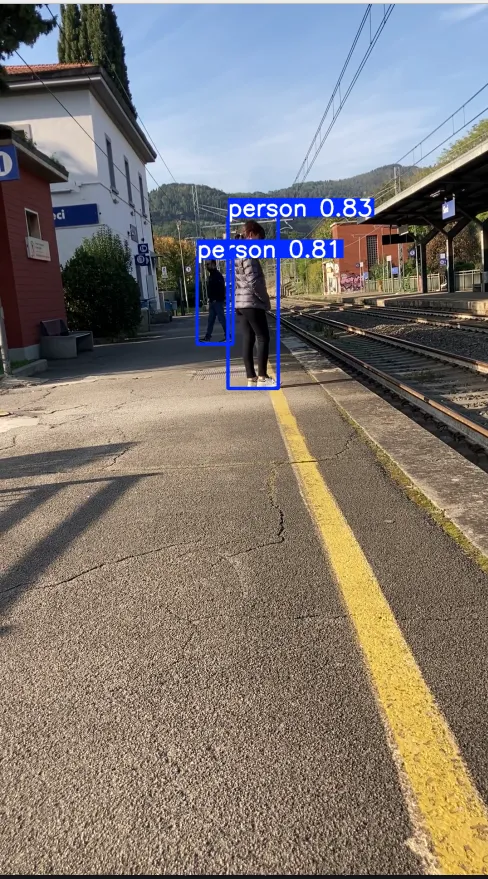

image_pred.show()拍摄一张有两个人的图像,输出如下:

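Using the same model, I can also extract the coordinates of each person's box and draw the corresponding rectangle with OpenCV. The following script gives a result similar to the previous one. Drawing the bounding boxes by hand will be useful in Section 4.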

    from ultralytics import YOLO
    import cv2

    font = cv2.FONT_HERSHEY_DUPLEX

    # Load a pretrained YOLO11n model
    model = YOLO("yolo11n.pt")
    path_image = "resources/images/frame_yellow_line_900.png"
    image = cv2.imread(path_image)
    annotated_frame = image.copy()

    # restrict the prediction to the classes of interest; person has index 0
    results = model.predict(image, classes=[0], conf=0.54)
    image_pred = results[0]
    boxes = image_pred.boxes

    # iterate over all the detected boxes of persons
    for box in boxes:
        x1 = int(box.xyxy[0][0])
        y1 = int(box.xyxy[0][1])
        x2 = int(box.xyxy[0][2])
        y2 = int(box.xyxy[0][3])
        coords = (x1, y1 - 10)
        text = "person"
        print("x1: {} - y1: {} - x2: {} - y2: {}".format(x1, y1, x2, y2))
        color = (0, 255, 0)  # colors in BGR
        thickness = 3
        # draw on the copy so that the original image stays untouched
        annotated_frame = cv2.rectangle(annotated_frame, (x1, y1), (x2, y2), color, thickness)
        annotated_frame = cv2.putText(annotated_frame, text, coords, font, 0.7, color, 2)

    annotated_frame_path = "/home/enrico/Projects/VideoSurveillance/resources/images/annotated_frame_900.png"
    cv2.imwrite(annotated_frame_path, annotated_frame)

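2. Pose estimation models

In some cases it is essential to locate specific body parts. For these situations, YOLO11 provides a set of pretrained models dedicated to pose estimation. These models output a series of keypoints that mark points of interest on the objects in the image. For each person, YOLO11 identifies 17 keypoints distributed strategically over the body.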
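The 17 keypoints follow the COCO ordering used by the pretrained pose models. The sketch below lists that ordering and picks out the two ankle points; `ankle_points` is a hypothetical helper name, and it assumes that a keypoint reported at (0, 0) was not detected.

```python
# COCO keypoint order (list index -> body part)
COCO_KEYPOINTS = [
    "nose", "left_eye", "right_eye", "left_ear", "right_ear",
    "left_shoulder", "right_shoulder", "left_elbow", "right_elbow",
    "left_wrist", "right_wrist", "left_hip", "right_hip",
    "left_knee", "right_knee", "left_ankle", "right_ankle",
]


def ankle_points(keypoints_xy):
    """Return the detected ankle points of one person as {name: (x, y)}.

    keypoints_xy: sequence of 17 (x, y) pairs; (0, 0) is treated as missing
    (an assumption of this sketch).
    """
    ankles = {}
    for idx in (15, 16):
        x, y = keypoints_xy[idx]
        if (x, y) != (0, 0):
            ankles[COCO_KEYPOINTS[idx]] = (x, y)
    return ankles


# Made-up person for whom only the left ankle is visible
person = [(0, 0)] * 17
person[15] = (410, 720)
print(ankle_points(person))
```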

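In the script below, I show how to extract these keypoints for several people in an image. YOLO11 supports five pretrained pose estimation models. Because some people may appear far from the camera, I use a more powerful model here, yolo11m-pose.pt. The keypoints extracted for each individual can also be used to build a bounding box around the person: take the minimum and maximum x and y coordinates of the keypoints and join them into a rectangle that encloses the person.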

from ultralytics import YOLO

import cv2

font = cv2.FONT_HERSHEY_DUPLEX

# Load a pretrained YOLO11n-pose Pose model

model = YOLO("yolo11m-pose.pt")

# Run inference on an image

path_image = "resources/images/frame_yellow_line_900.png"

image = cv2.imread(path_image)

annotated_frame_keypoints = image.copy()

annotated_frame_bbox = image.copy()

results = model(image) # results list

# extract keypoints

keypoints = results[0].keypoints

conf = keypoints.conf

xy = keypoints.xy

print(xy.shape) # (N, K, 2) where N is the number of person detected

print("Detected person: ", xy.shape[0])

# iter over persons

for idx_person in range(xy.shape[0]):

print("idx_person: ", idx_person)

#iter over keypoints of a fixed person

list_x = []

list_y = []

for i, th in enumerate(xy[idx_person]):

x = int(th[0])

y = int(th[1])

if x !=0.0 and y!=0.0:

list_x.append(x)

list_y.append(y)

print("x: {} - y: {}".format(x, y))

annotated_frame_keypoints = cv2.circle(annotated_frame_keypoints, (x,y), radius=3, color=(0, 0, 255), thickness=-1)

annotated_frame_keypoints = cv2.putText(annotated_frame_keypoints, str(i), (x, y-5), font, 0.7, (0, 0, 255), 2)

if len(list_x) > 0 and len(list_y) > 0:

min_x = min(list_x)

max_x = max(list_x)

min_y = min(list_y)

max_y = max(list_y)

print("min_x: {} - max_x: {} - min_y: {} - max_y: {}".format(min_x, max_x, min_y, max_y))

w = max_x - min_x

h = max_y - min_y

dx = int(w/3)

x0 = min_x - dx

x1 = max_x + dx

y0 = min_y - dx

y1 = max_y + dx

print("x0: {} - x1: {} - y0: {} - y1: {}".format(x0, x1, y0, y1))

coords = (x0, y0 - 10)

text = "person"

color = (0, 255, 0) # colors in BGR

thickness = 3

annotated_frame_bbox = cv2.rectangle(annotated_frame_bbox, (x0, y0), (x1, y1), color, thickness)

annotated_frame_bbox = cv2.putText(annotated_frame_bbox, text, coords, font, 0.7, color, 2)

annotated_frame_path = "/home/enrico/Projects/VideoSurveillance/resources/images/annotated_frame_keypoints_900.png"

cv2.imwrite(annotated_frame_path, annotated_frame_keypoints)

annotated_frame_bbox_path = "/home/enrico/Projects/VideoSurveillance/resources/images/annotated_frame_keypoints_bbox_900.png"

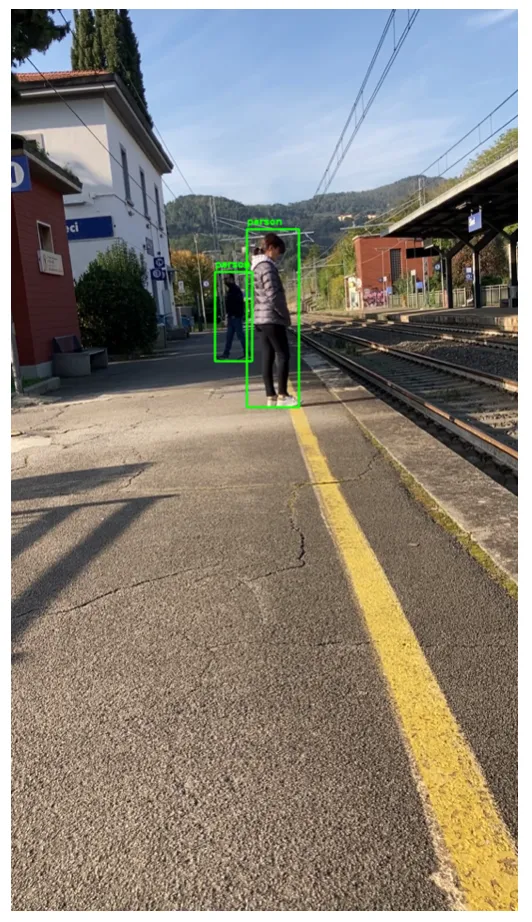

cv2.imwrite(annotated_frame_bbox_path, annotated_frame_bbox)在图 3 中,我展示了将脚本应用于同一图像的结果。每个人的关键点从 0 到 16。如果未检测到某些关键点,系统不会产生错误;它只是将它们从输出图像中省略。对于边界框,我们可以发现与对象检测模型相比存在微小的差异,这主要是因为关键点位于人体内。
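The bounding-box construction used in the script can be isolated in a small helper. This is a minimal sketch: the function name `bbox_from_keypoints` is hypothetical, the sample points are made up, and the padding mirrors the script's choice of one third of the keypoint width on every side.

```python
def bbox_from_keypoints(points):
    """Build a padded box (x0, y0, x1, y1) around the detected keypoints.

    points: iterable of (x, y) pairs. Points with a zero coordinate are
    skipped, as in the script. Returns None if no keypoint was detected.
    """
    kept = [(x, y) for x, y in points if x != 0 and y != 0]
    if not kept:
        return None
    xs = [x for x, _ in kept]
    ys = [y for _, y in kept]
    pad = int((max(xs) - min(xs)) / 3)   # same padding rule as the script
    return (min(xs) - pad, min(ys) - pad, max(xs) + pad, max(ys) + pad)


print(bbox_from_keypoints([(100, 50), (160, 300), (0, 0), (130, 180)]))
```

Because the padding depends only on the width, a person seen from the side (narrow keypoint spread) gets a tighter box than one facing the camera.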

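3. Yellow line detection with the Hough transform

The Hough transform is a feature extraction technique that can detect lines in an image. For a deeper treatment, you can read this post.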

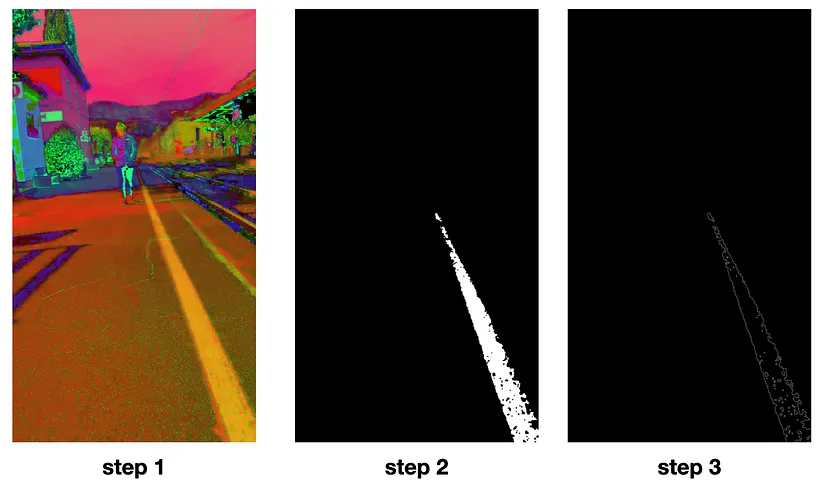

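To detect the yellow line near the platform and determine its linear equation, we need to follow the steps below. First, we must isolate the yellow line from the rest of the image. This task is easier if we convert the image from the BGR color space to the HSV (hue, saturation, value) color space. The conversion is performed with the following line of code, and the output is shown in Figure 4, Step 1.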

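    # transform the frame to hsv color space
    frame_hsv = cv2.cvtColor(image, cv2.COLOR_BGR2HSV)

The second step is to define the range of yellow hues that best covers the color of the line. After some testing, I settled on the values below. With this range, I isolated the pixels that fall inside the specified yellow interval, which gives the mask of the yellow line shown in Figure 4, Step 2: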

    # Set the lower and upper yellow bounds in the HSV space
    yellow_light = np.array([20, 140, 200], np.uint8)
    yellow_dark = np.array([35, 255, 255], np.uint8)

    # isolate the yellow line
    mask_yellow = cv2.inRange(frame_hsv, yellow_light, yellow_dark)
    kernel = np.ones((4, 4), "uint8")

    # Morphological closing to fill the black spots in white regions
    mask_yellow = cv2.morphologyEx(mask_yellow, cv2.MORPH_CLOSE, kernel)

    # Morphological opening to remove the white spots in black regions
    mask_yellow = cv2.morphologyEx(mask_yellow, cv2.MORPH_OPEN, kernel)

The third step applies the Canny edge detection algorithm to the mask obtained in Step 2. This produces the edge image shown in Figure 4, Step 3:

    # find the edges of the isolated yellow line
    edges_yellow = cv2.Canny(mask_yellow, 50, 150)
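To get a feel for the HSV range used for the mask, the following pure-Python sketch converts single pixels to HSV on OpenCV's 8-bit scale (H in 0-179, S and V in 0-255) and tests them against the two bounds. It only approximates what cv2.cvtColor and cv2.inRange do per pixel (it truncates where OpenCV rounds), the helper names are hypothetical, and the sample colors are made up.

```python
import colorsys

# HSV bounds from the article, on OpenCV's 8-bit scale
LOWER = (20, 140, 200)
UPPER = (35, 255, 255)


def bgr_to_opencv_hsv(b, g, r):
    """Convert one 8-bit BGR pixel to approximate OpenCV-scale HSV."""
    h, s, v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
    return (int(h * 180), int(s * 255), int(v * 255))


def in_yellow_range(bgr):
    """True if the pixel lies inside [LOWER, UPPER] on every channel."""
    hsv = bgr_to_opencv_hsv(*bgr)
    return all(lo <= c <= hi for c, lo, hi in zip(hsv, LOWER, UPPER))


print(in_yellow_range((0, 217, 255)))     # bright yellow paint
print(in_yellow_range((128, 128, 128)))   # grey platform surface
print(in_yellow_range((0, 100, 120)))     # dark, shadowed yellow
```

The shadowed yellow fails on the value channel, which suggests why the bounds may need retuning for footage with different lighting.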

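We are now ready to use the probabilistic Hough transform function to extract all candidate line segments in the image. The syntax is: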

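    lines = cv2.HoughLinesP(image, rho, theta, threshold, minLineLength=None, maxLineGap=None)

where:

- image: the input 8-bit, single-channel binary image. In our application, it is the edge image produced by the Canny algorithm.
- rho: the resolution of the accumulator along the distance dimension, in pixels. It sets the precision of the distance from the line to the origin (the top-left corner of the image). Smaller values give higher precision.
- theta: the resolution of the accumulator along the angle dimension, in radians. It defines how finely the angle of the line is quantized.
- threshold: the minimum number of votes (intersections in the Hough accumulator) needed to accept a line as valid. Higher values make detection stricter.
- minLineLength: the minimum length of a segment for it to be considered valid. Shorter segments are discarded.
- maxLineGap: the maximum gap, in pixels, between two segments for them to be joined into a single line. It controls how broken pieces of the same line are handled.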
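To make rho, theta, and threshold more concrete, the following pure-Python sketch implements the voting idea of the standard (non-probabilistic) Hough transform on a handful of points. It only illustrates the accumulator and is not how cv2.HoughLinesP is implemented; `hough_peak` is a hypothetical helper, with rho quantized to 1 pixel and theta to 1 degree.

```python
import math
from collections import Counter


def hough_peak(points, theta_step_deg=1):
    """Vote in (rho, theta) space; return ((rho, theta_deg), votes) of the best bin."""
    votes = Counter()
    for x, y in points:
        for theta_deg in range(0, 180, theta_step_deg):
            theta = math.radians(theta_deg)
            # normal form of a line: rho = x*cos(theta) + y*sin(theta)
            rho = round(x * math.cos(theta) + y * math.sin(theta))
            votes[(rho, theta_deg)] += 1
    return votes.most_common(1)[0]


# 21 points on the line y = x, spaced 10 pixels apart
points = [(k, k) for k in range(0, 201, 10)]
(rho, theta_deg), count = hough_peak(points)
print(rho, theta_deg, count)
```

All 21 points vote for the same bin, so a threshold of up to 21 would accept this line, while a higher threshold would reject it.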

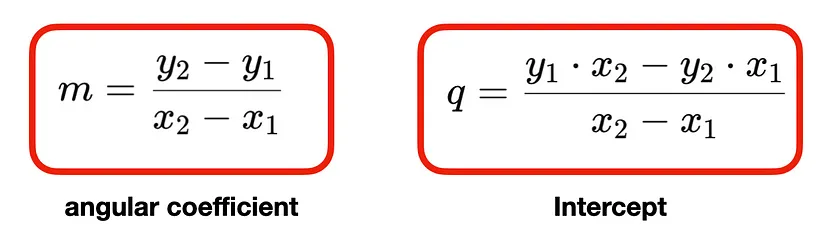

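The HoughLinesP function returns an array of detected line segments, each defined by its endpoints. Specifically, each segment is represented as [x1, y1, x2, y2], where (x1, y1) and (x2, y2) are the coordinates of the endpoints. From these coordinates we can compute the parameters of the corresponding straight line y = m x + q, namely the slope m (angular coefficient) and the y-intercept q, with the following formulas:

$$m = \frac{y_2 - y_1}{x_2 - x_1}, \qquad q = y_1 - m\,x_1$$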
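As a quick worked example of the slope and intercept computation, the sketch below derives m and q from two endpoints. The function name `line_from_segment` and the sample coordinates are assumptions made for illustration.

```python
def line_from_segment(x1, y1, x2, y2):
    """Return (m, q) of the line y = m*x + q through the two endpoints.

    Raises ValueError when x1 == x2, because the slope is then undefined.
    """
    if x1 == x2:
        raise ValueError("slope is undefined when x1 == x2")
    m = (y2 - y1) / (x2 - x1)
    q = y1 - m * x1
    return m, q


# Made-up segment from (100, 900) to (400, 300) in pixel coordinates
m, q = line_from_segment(100, 900, 400, 300)
print(m, q)
```

Here m = (300 - 900) / (400 - 100) = -2 and q = 900 - (-2)(100) = 1100; substituting the second endpoint gives -2 * 400 + 1100 = 300, as expected.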

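Note that for vertical lines the slope is undefined, because x1 = x2 and the denominator is zero. In that case the line is described by the equation x = x1, that is, x has the same value for every point of the line. In the following script, I apply the HoughLinesP function together with the slope and y-intercept formulas. After trying different values of threshold, minLineLength, and maxLineGap, I found settings that yield a single straight line for the yellow line.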

    import cv2
    import numpy as np

    def find_slope(x1, y1, x2, y2):
        if x2 != x1:
            return ((y2 - y1) / (x2 - x1))
        else:
            return np.inf

    def find_m_and_q(edges):
        lines = cv2.HoughLinesP(
            image=edges,        # Input edge image
            rho=1,              # Distance resolution in pixels
            theta=np.pi/180,    # Angle resolution in radians
            threshold=120,      # Min number of votes for valid line
            minLineLength=80,   # Min allowed length of line
            maxLineGap=10       # Max allowed gap between line for joining them
        )
        coefficient_list = []
        if lines is None:
            # if no line is found return an empty list of coefficients
            return coefficient_list
        else:
            # Iterate over the detected segments
            for points in lines:
                x_vertical = None
                # Extracted points nested in the list
                x1, y1, x2, y2 = points[0]
                slope = find_slope(x1, y1, x2, y2)
                if slope == np.inf:
                    # vertical line: no intercept, store the constant x instead
                    intercept = None
                    x_vertical = x1
                else:
                    intercept = y1-(x1*(y2-y1)/(x2-x1))
                coefficient_list.append((slope, intercept, x_vertical))
        print("coefficient_list: ", coefficient_list)
        return coefficient_list

    def draw_lines(image, list_coefficient):
        image_line = image.copy()
        h, w = image_line.shape[:2]
        for coeff in list_coefficient:
            m, q, x_v = coeff
            if m == np.inf:
                # vertical line: x is constant
                p0, p1 = (int(x_v), 0), (int(x_v), h)
            elif m == 0:
                # horizontal line: y is constant (avoids dividing by zero)
                p0, p1 = (0, int(q)), (w, int(q))
            else:
                # intersections with the top (y = 0) and bottom (y = h) borders
                p0, p1 = (int(-q/m), 0), (int((h-q)/m), h)
            cv2.line(image_line, p0, p1, (0, 255, 0), 6)
        return image_line

The draw_lines() function draws the detected straight line, as shown in Figure 5.

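4. Detecting yellow-line crossings

It is now time to combine the two techniques, person detection and yellow line detection. For this purpose, I used a video recorded at a station in which some people walk near the tracks and cross the yellow line.

Because the camera is fixed, the yellow line stays in the same position in every frame of the video. To estimate the corresponding equation, I therefore only need the first frame. Once the equation of the line is known, I apply the pretrained YOLO11 model to every frame to detect all the people in it. The remaining task is to determine whether a person has crossed the yellow line.

The simplest solution is to check whether a person's bounding box intersects the line. However, perspective can cause problems: depending on the positions of the camera and the person, the bounding box may intersect the line even though the person has not actually crossed it. In reality, a person crosses the yellow line with their feet, so it is necessary to focus on the points closest to the feet. In the following subsections, I discuss two possible approaches and analyze their results. In both cases, the bounding box is drawn in green while the person stays behind the yellow line and turns red when the system detects an unauthorized crossing.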
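The test on a single point can be sketched as follows. This assumes, as the scripts in this section do, that the danger zone lies to the right of the line in image coordinates; the name `is_past_line` is hypothetical, and the sample line is the made-up one through (100, 900) and (400, 300).

```python
import math


def is_past_line(m, q, x_v, x, y):
    """True if the point (x, y) lies to the right of the line.

    m, q: slope and intercept of y = m*x + q. For a vertical line m is
    math.inf and the line is x = x_v.
    """
    if m == math.inf:
        return x > x_v
    if m == 0:
        raise ValueError("a horizontal line has no left or right side")
    x_line = (y - q) / m   # x of the line at the height of the point
    return x > x_line


# Sample line with m = -2 and q = 1100; at y = 700 the line is at x = 200
print(is_past_line(-2.0, 1100.0, None, 300, 700))
print(is_past_line(-2.0, 1100.0, None, 150, 700))
print(is_past_line(math.inf, None, 250, 240, 700))
```

For a camera on the other side of the platform, the comparison would have to be reversed.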

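Both scripts were run on Google Colab, using the free GPU that the service provides. To avoid running out of memory, I reduced the original size of the video frames.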
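A back-of-the-envelope calculation shows why the resizing matters when every frame is kept in memory before the video is written. The sketch assumes the values that appear in the scripts and results of this article (frames of 1920 x 1080 pixels, a new height of 990, and 1650 frames); the helper names are hypothetical.

```python
def resized_shape(height, width, new_height):
    """Return (new_height, new_width), preserving the aspect ratio."""
    aspect_ratio = width / height
    return new_height, int(aspect_ratio * new_height)


def video_bytes(height, width, n_frames, channels=3):
    """Bytes needed to hold n_frames uint8 frames in a single array."""
    return height * width * channels * n_frames


new_h, new_w = resized_shape(1920, 1080, 990)
print(new_h, new_w)
print(video_bytes(1920, 1080, 1650) / 1e9)    # GB at the original size
print(video_bytes(new_h, new_w, 1650) / 1e9)  # GB after resizing
```

Under these assumptions the frame stack shrinks from roughly 10.3 GB to roughly 2.7 GB.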

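4.1 First approach: using the bottom-right corner of the bounding box

A simple solution is to consider the bottom-right corner of the bounding box. If this point is beyond the yellow line, the person has crossed it. The script below implements this first attempt at solving the problem.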

    import argparse
    import time

    import cv2
    import numpy as np
    import skvideo.io
    import torch
    from ultralytics import YOLO

    font = cv2.FONT_HERSHEY_DUPLEX

    def find_slope(x1, y1, x2, y2):
        if x2 != x1:
            return ((y2 - y1) / (x2 - x1))
        else:
            return np.inf

    def find_m_and_q(edges):
        lines = cv2.HoughLinesP(
            edges,              # Input edge image
            1,                  # Distance resolution in pixels
            np.pi/180,          # Angle resolution in radians
            threshold=120,      # Min number of votes for valid line
            minLineLength=80,   # Min allowed length of line
            maxLineGap=10       # Max allowed gap between line for joining them
        )
        coefficient_list = []
        if lines is None:
            # if no line is found return an empty list of coefficients
            return coefficient_list
        else:
            # Iterate over the detected segments
            for points in lines:
                x_vertical = None
                # Extracted points nested in the list
                x1, y1, x2, y2 = points[0]
                slope = find_slope(x1, y1, x2, y2)
                if slope == np.inf:
                    # vertical line: no intercept, store the constant x instead
                    intercept = None
                    x_vertical = x1
                else:
                    intercept = y1-(x1*(y2-y1)/(x2-x1))
                coefficient_list.append((slope, intercept, x_vertical))
        print("coefficient_list: ", coefficient_list)
        return coefficient_list

    def find_yellow_line_parameter(image):
        # Set the lower and upper yellow bounds in the HSV space
        yellow_light = np.array([20, 140, 200], np.uint8)
        yellow_dark = np.array([35, 255, 255], np.uint8)

        # transform the frame to hsv color space
        frame_hsv = cv2.cvtColor(image, cv2.COLOR_BGR2HSV)

        # isolate the yellow line
        mask_yellow = cv2.inRange(frame_hsv, yellow_light, yellow_dark)
        kernel = np.ones((4, 4), "uint8")

        # Morphological closing to fill the black spots in white regions
        mask_yellow = cv2.morphologyEx(mask_yellow, cv2.MORPH_CLOSE, kernel)

        # Morphological opening to remove the white spots in black regions
        mask_yellow = cv2.morphologyEx(mask_yellow, cv2.MORPH_OPEN, kernel)

        # find the edges of the isolated yellow line
        edges_yellow = cv2.Canny(mask_yellow, 50, 150)

        # find slope and intercept of the yellow line
        coefficient_list = find_m_and_q(edges_yellow)
        print("len(coefficient_list): ", len(coefficient_list))

        # no line found: return empty parameters instead of raising IndexError
        if not coefficient_list:
            return None, None, None
        return coefficient_list[0]

    if __name__ == '__main__':
        '''
        Detect people frame by frame and color each bounding box according to
        whether its bottom-right corner is beyond the yellow line
        '''
        parser = argparse.ArgumentParser()
        parser.add_argument('--input_video_path', type=str, default="resources/videos/video_stazione.mp4")
        parser.add_argument('--output_video_path', type=str, default="resources/videos/video_stazione_yolo_bbox.mp4")
        opt = parser.parse_args()
        input_video_path = opt.input_video_path
        output_video_path = opt.output_video_path

        device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
        print("device: {}".format(device))

        # Load a pretrained YOLO11n detection model
        model_bbox = YOLO("yolo11n.pt")
        model_bbox.to(device)

        cap = cv2.VideoCapture(input_video_path)
        frames_list = []
        count = 0
        m, q, x_v = None, None, None
        start_time = time.time()

        # Loop through the video frames
        while cap.isOpened():
            # Read a frame from the video
            success, frame = cap.read()
            if success:
                # find the parameters of the yellow straight line just for the first frame
                if count == 0:
                    m, q, x_v = find_yellow_line_parameter(frame)

                # Run YOLO inference on the frame, restricted to class 0 (person)
                results = model_bbox.predict(frame, classes=[0], conf=0.5, device=device)
                image_pred = results[0]  # index 0 because a single frame was processed
                boxes = image_pred.boxes

                # iterate over boxes
                for box in boxes:
                    x1 = int(box.xyxy[0][0])
                    y1 = int(box.xyxy[0][1])
                    x2 = int(box.xyxy[0][2])
                    y2 = int(box.xyxy[0][3])
                    coords = (x1, y1-10)
                    text = "person"

                    # check if the bottom-right corner (x2, y2) is beyond the line;
                    # q is None for a vertical line, so only m is tested here
                    is_over = False
                    if m is not None:
                        if m == np.inf:
                            is_over = x2 > x_v
                        else:
                            x2_yellow = (y2 - q)/m
                            is_over = x2 > x2_yellow

                    if is_over:
                        color = (0, 0, 255)  # colors in BGR
                    else:
                        color = (0, 255, 0)  # colors in BGR
                    thickness = 2
                    frame = cv2.rectangle(frame, (x1, y1), (x2, y2), color, thickness)
                    frame = cv2.putText(frame, text, coords, font, 0.7, color, 2)

                frame = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)

                # resize the frame because of memory
                # print("frame.shape: ", frame.shape) -> (1920, 1080, 3)
                new_h = 990
                aspect_ratio = frame.shape[1]/frame.shape[0]
                new_w = int(aspect_ratio*new_h)
                frame = cv2.resize(frame, (new_w, new_h))

                # append the new frame
                frames_list.append(frame)
                count += 1
            else:
                # Break the loop if the end of the video is reached
                break

        cap.release()
        print("count: ", count)
        end_time = time.time()
        print("Elaboration time: ", end_time-start_time)

        out_video = np.array(frames_list)
        out_video = out_video.astype(np.uint8)
        print("out_video.shape: ", out_video.shape)

        # skvideo works in RGB, so the frames must be in RGB
        skvideo.io.vwrite(output_video_path,
                          out_video,
                          inputdict={'-r': str(int(30)),})

Applying the script to the original video, I tracked the people near the platform and colored their bounding boxes according to whether they had crossed the yellow line (red box) or not (green box).

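4.2 Second approach: using the foot keypoints

The previous solution is not fully accurate. For example, if a person stretches out an arm, the bottom-right corner of the bounding box may get close to the yellow line, or even go beyond it, although the person has not actually crossed it. A more precise solution is to use the foot keypoints: when the keypoint of one or both feet is beyond the yellow line, we can conclude that the person has really crossed it. The script below implements this more accurate attempt. Once a person's keypoints are detected, I focus on those related to the feet, namely the keypoints with index 15 and 16 (the left and right ankles).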

    import argparse
    import time

    import cv2
    import numpy as np
    import skvideo.io
    import torch
    from ultralytics import YOLO

    font = cv2.FONT_HERSHEY_DUPLEX

    def find_slope(x1, y1, x2, y2):
        if x2 != x1:
            return ((y2 - y1) / (x2 - x1))
        else:
            return np.inf

    def find_m_and_q(edges):
        lines = cv2.HoughLinesP(
            edges,              # Input edge image
            1,                  # Distance resolution in pixels
            np.pi/180,          # Angle resolution in radians
            threshold=120,      # Min number of votes for valid line
            minLineLength=80,   # Min allowed length of line
            maxLineGap=10       # Max allowed gap between line for joining them
        )
        coefficient_list = []
        if lines is None:
            # if no line is found return an empty list of coefficients
            return coefficient_list
        else:
            # Iterate over the detected segments
            for points in lines:
                x_vertical = None
                # Extracted points nested in the list
                x1, y1, x2, y2 = points[0]
                slope = find_slope(x1, y1, x2, y2)
                if slope == np.inf:
                    # vertical line: no intercept, store the constant x instead
                    intercept = None
                    x_vertical = x1
                else:
                    intercept = y1-(x1*(y2-y1)/(x2-x1))
                coefficient_list.append((slope, intercept, x_vertical))
        print("coefficient_list: ", coefficient_list)
        return coefficient_list

    def find_yellow_line_parameter(image):
        # Set the lower and upper yellow bounds in the HSV space
        yellow_light = np.array([20, 140, 200], np.uint8)
        yellow_dark = np.array([35, 255, 255], np.uint8)

        # transform the frame to hsv color space
        frame_hsv = cv2.cvtColor(image, cv2.COLOR_BGR2HSV)

        # isolate the yellow line
        mask_yellow = cv2.inRange(frame_hsv, yellow_light, yellow_dark)
        kernel = np.ones((4, 4), "uint8")

        # Morphological closing to fill the black spots in white regions
        mask_yellow = cv2.morphologyEx(mask_yellow, cv2.MORPH_CLOSE, kernel)

        # Morphological opening to remove the white spots in black regions
        mask_yellow = cv2.morphologyEx(mask_yellow, cv2.MORPH_OPEN, kernel)

        # find the edges of the isolated yellow line
        edges_yellow = cv2.Canny(mask_yellow, 50, 150)

        # find slope and intercept of the yellow line
        coefficient_list = find_m_and_q(edges_yellow)
        print("len(coefficient_list): ", len(coefficient_list))

        # no line found: return empty parameters instead of raising IndexError
        if not coefficient_list:
            return None, None, None
        return coefficient_list[0]

    def check_crossing_yellow_line(m, q, x_v, x, y):
        is_over = False
        if m is None:
            # no yellow line was found, so nothing can be crossed
            return is_over
        if m != np.inf:
            x_yellow = (y-q)/m
            if x > x_yellow:
                is_over = True
        else:
            if x > x_v:
                is_over = True
        return is_over

    if __name__ == '__main__':
        '''
        Detect people frame by frame and color each bounding box according to
        whether a foot keypoint is beyond the yellow line
        '''
        parser = argparse.ArgumentParser()
        parser.add_argument('--input_video_path', type=str, default="resources/videos/video_stazione.mp4")
        parser.add_argument('--output_video_path', type=str, default="resources/videos/video_stazione_yolo_keypoints.mp4")
        opt = parser.parse_args()
        input_video_path = opt.input_video_path
        output_video_path = opt.output_video_path

        device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
        print("device: {}".format(device))

        # Load a pretrained YOLO11m-pose model
        model_keypoints = YOLO("yolo11m-pose.pt")
        model_keypoints.to(device)

        cap = cv2.VideoCapture(input_video_path)
        frames_list = []
        count = 0
        m, q, x_v = None, None, None
        start_time = time.time()

        # Loop through the video frames
        while cap.isOpened():
            # Read a frame from the video
            success, frame = cap.read()
            if success:
                # on the first frame, set the parameters of the yellow straight line
                if count == 0:
                    m, q, x_v = find_yellow_line_parameter(frame)

                # Run YOLO inference on the frame
                results = model_keypoints.predict(frame, device=device)
                keypoints = results[0].keypoints
                xy = keypoints.xy
                print("Detected person: ", xy.shape[0])

                # iterate over persons
                for idx_person in range(xy.shape[0]):
                    # iterate over the keypoints of one person to find the bbox
                    # and the keypoints 15 and 16
                    list_x = []
                    list_y = []
                    x15 = None
                    y15 = None
                    x16 = None
                    y16 = None
                    for i, th in enumerate(xy[idx_person]):
                        x = int(th[0])
                        y = int(th[1])
                        # keypoints with a zero coordinate are treated as not detected
                        if x != 0 and y != 0:
                            list_x.append(x)
                            list_y.append(y)
                            if i == 15:
                                x15 = x
                                y15 = y
                            elif i == 16:
                                x16 = x
                                y16 = y

                    # check the crossing of the yellow line
                    is_over = False
                    if x15 is not None and y15 is not None:
                        is_over = check_crossing_yellow_line(m, q, x_v, x15, y15)
                    if not is_over and x16 is not None and y16 is not None:
                        is_over = check_crossing_yellow_line(m, q, x_v, x16, y16)

                    # build the bbox of the person if the lists are not empty
                    if len(list_x) > 0 and len(list_y) > 0:
                        min_x = min(list_x)
                        max_x = max(list_x)
                        min_y = min(list_y)
                        max_y = max(list_y)
                        w = max_x - min_x
                        h = max_y - min_y
                        dx = int(w / 3)
                        x0 = min_x - dx
                        x1 = max_x + dx
                        y0 = min_y - dx
                        y1 = max_y + dx
                        coords = (x0, y0 - 10)
                        text = "person"
                        if is_over:
                            color = (0, 0, 255)  # colors in BGR
                        else:
                            color = (0, 255, 0)  # colors in BGR
                        thickness = 3
                        frame = cv2.rectangle(frame, (x0, y0), (x1, y1), color, thickness)
                        frame = cv2.putText(frame, text, coords, font, 0.7, color, 2)
                    print("idx_person: {} - is_over: {}".format(idx_person, is_over))

                frame = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)

                # resize the frame because of memory
                # print("frame.shape: ", frame.shape) -> (1920, 1080, 3)
                new_h = 990
                aspect_ratio = frame.shape[1]/frame.shape[0]
                new_w = int(aspect_ratio*new_h)
                frame = cv2.resize(frame, (new_w, new_h))
                frames_list.append(frame)
                count += 1
            else:
                # Break the loop if the end of the video is reached
                break

        cap.release()
        print("count: ", count)
        end_time = time.time()
        print("Elaboration time: ", end_time-start_time)

        out_video = np.array(frames_list)
        out_video = out_video.astype(np.uint8)
        print("out_video.shape: ", out_video.shape)

        # skvideo works in RGB, so the frames must be in RGB
        skvideo.io.vwrite(output_video_path,
                          out_video,
                          inputdict={'-r': str(int(30)),})

With the keypoint-based approach, I obtained the corresponding output video.

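For the test video used in this demonstration, the two approaches give similar results, because the camera is well positioned and there are no perspective effects that could fool the algorithm.

In both cases, the results are correct and of good quality. In terms of computational cost, we can also observe:

- Person detection model: yolo11n.pt takes about 10 ms to run inference on a single frame. In total, 1650 frames were processed in 47 seconds.

- Keypoint detection model: yolo11m-pose.pt takes about 15 ms to run inference on a single frame. In total, 1650 frames were processed in 54 seconds.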
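The figures above can be turned into throughput numbers with a few lines of arithmetic. The sketch below uses only the values reported in the list; the helper name `throughput` is hypothetical.

```python
def throughput(n_frames, total_seconds, inference_ms):
    """Return (frames per second, seconds spent in inference, inference share)."""
    fps = n_frames / total_seconds
    inference_s = n_frames * inference_ms / 1000
    return fps, inference_s, inference_s / total_seconds


for name, total_s, ms in [("yolo11n.pt", 47, 10), ("yolo11m-pose.pt", 54, 15)]:
    fps, inf_s, share = throughput(1650, total_s, ms)
    print(f"{name}: {fps:.1f} fps, {inf_s:.2f} s of inference ({share:.0%} of total)")
```

Both runs process at least 30 frames per second overall, and inference accounts for less than half of the total time; the rest presumably goes to decoding, drawing, color conversion, and resizing.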

Translated and compiled by 汇智网; please credit the source when republishing.